Exploring Latent Spaces

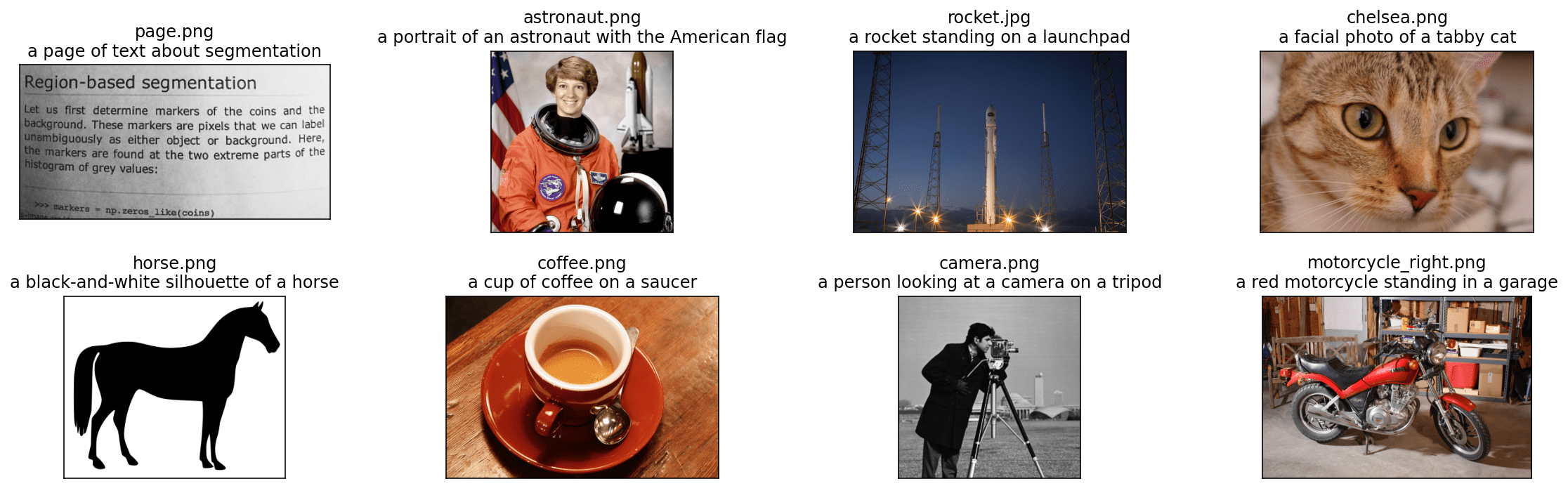

CLIP (2021)

https://github.com/OpenAI/CLIP
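CLIP encodes images and captions into one shared embedding space and compares them there. The sketch below is a minimal NumPy illustration of that comparison, assuming you already have embeddings from CLIP's image and text encoders: it L2-normalizes the vectors, takes cosine similarities, scales them, and applies a softmax over the candidate captions. The function name `clip_style_probs` and the toy 3-dimensional vectors are hypothetical stand-ins, and the default `logit_scale=100.0` is an assumption based on the cap CLIP places on its learned temperature; check it against the released model.

```python
import numpy as np


def clip_style_probs(image_emb: np.ndarray, text_embs: np.ndarray,
                     logit_scale: float = 100.0) -> np.ndarray:
    """Score one image embedding against several caption embeddings.

    image_emb: shape (d,); text_embs: shape (n, d). Both are assumed to be
    projected into the same shared space (hypothetical helper, not CLIP's API).
    """
    # L2-normalize so a dot product equals cosine similarity
    img = image_emb / np.linalg.norm(image_emb)
    txt = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    # Scaled cosine similarities ("logits"), one per caption
    logits = logit_scale * (txt @ img)
    # Numerically stable softmax over the captions
    logits = logits - logits.max()
    exp = np.exp(logits)
    return exp / exp.sum()


# Toy vectors standing in for real CLIP embeddings
image = np.array([1.0, 0.0, 0.0])
captions = np.array([[0.9, 0.1, 0.0],   # points almost the same way as the image
                     [0.0, 1.0, 0.0]])  # orthogonal to the image
probs = clip_style_probs(image, captions)
print(probs)
```

Because every comparison happens in the shared space, a caption "means" whatever region of image space it lands nearest to, which is one way to read Underwood's "synesthesia" below.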


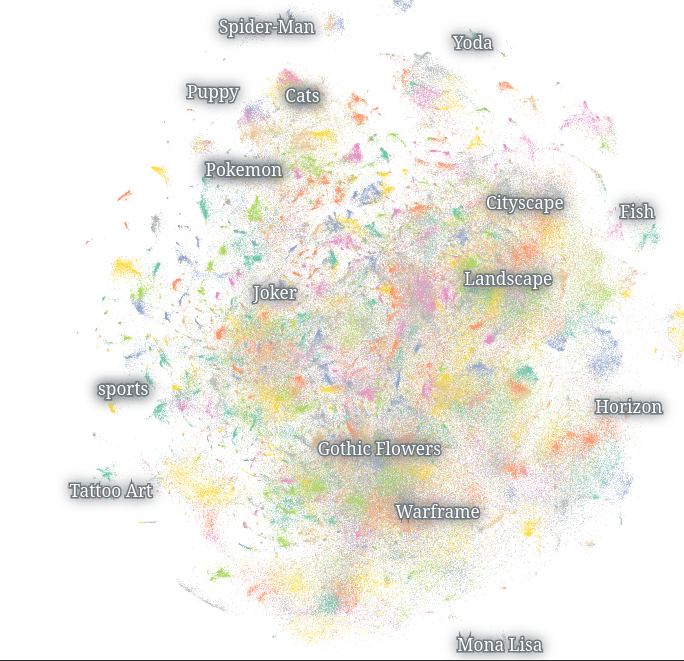

Stable Diffusion

“In practice, the people tinkering with CLIP don’t expect it to respond like a human reader. More to the point, they don’t want it to. They’re fascinated because CLIP uses language differently than a human individual would — mashing together the senses and overtones of words and refracting them into the potential space of internet images like a new kind of synesthesia. The pictures produced are fascinating, but (at least for now) too glitchy to impress most people as art. They’re better understood as postcards from an unmapped latent space.”

-- Ted Underwood, "Mapping the latent spaces of culture"

"Grace Hopper driving in a victory parade down fifth avenue"

https://huggingface.co/spaces/stabilityai/stable-diffusion

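```python
from nomic import AtlasDataset

# Load a public Atlas dataset by its identifier
dataset = AtlasDataset('andriy/krea-stable-diffusion-latent-space')

# The first map attached to the dataset
map = dataset.maps[0]

# In a notebook, evaluating each of these displays it
map.topics      # topics Atlas detected across the map
map.duplicates  # groups of near-duplicate points
map.embeddings  # the points' embeddings (latent and 2D projected)
map.data        # the underlying records

# The records as a pandas DataFrame
df = map.data.df
```

Exercise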

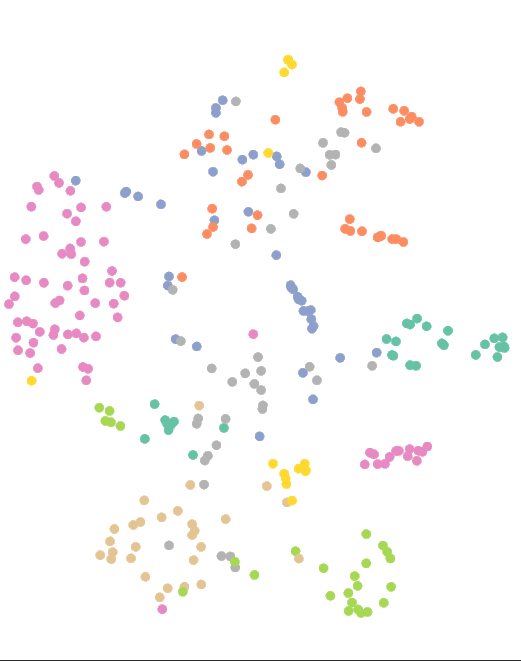

- If LLMs are models of culture, what can we learn from them about culture?

- Find three notable or surprising observations from the Krea data.
  - How are they notable or significant?
  - How do they speak to issues of culture?
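One possible starting point for finding surprising observations is to pick a prompt and look at its nearest neighbors in embedding space. The sketch below assumes you have extracted the map's embeddings as a 2D NumPy array whose rows line up with the rows of `df` (check the Nomic documentation for the exact accessor and row order). The helper `nearest_neighbors` is hypothetical and uses cosine similarity; the toy array only demonstrates the mechanics and is not Krea data.

```python
import numpy as np


def nearest_neighbors(embeddings: np.ndarray, query_index: int, k: int = 5) -> np.ndarray:
    """Return indices of the k rows most cosine-similar to row `query_index`.

    Hypothetical helper; the query row itself is excluded from the result.
    """
    k = min(k, len(embeddings) - 1)
    # Normalize each row, guarding against all-zero vectors
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / np.where(norms == 0, 1.0, norms)
    # Cosine similarity of every row to the query row
    sims = unit @ unit[query_index]
    sims[query_index] = -np.inf  # never return the query itself
    # Sort by descending similarity and keep the top k
    return np.argsort(-sims)[:k]


# Toy 2-D embeddings standing in for real ones
toy = np.array([[1.0, 0.0],
                [0.9, 0.1],
                [0.0, 1.0],
                [-1.0, 0.0]])
neighbors = nearest_neighbors(toy, query_index=0, k=2)
print(neighbors)
```

With real data, `df.iloc[nearest_neighbors(emb, i)]` would show the prompts closest to prompt `i`, assuming `emb` and `df` share a row order. Neighbors that are close in the model's space but unrelated to a human reader are good candidates for the exercise.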