\text{Intro to AutoEncoders and Variational AutoEncoders}

\textbf{Naresh Kumar Devulapally}

\text{CSE 4/573: Computer Vision and Image Processing}

\text{CSE 4/573: CVIP, Summer 2025}

\text{July 10, 2025}

\text{Lecture 13: July 15, 2025}

CVIP 2.0

\text{AutoEncoders}

\text{Agenda of this Lecture:}

- Neural Networks (NNs) are feature extractors

- Feature Extraction using NNs recap

- AutoEncoders

- Transpose Convolutions

- AutoEncoders PyTorch Coding Example

- Inference in AutoEncoders

- Variational AutoEncoders

- Notation in Variational AutoEncoders (VAEs)

- ELBO in VAEs

- VAEs PyTorch Coding Example

\text{Feature Extraction using NNs}

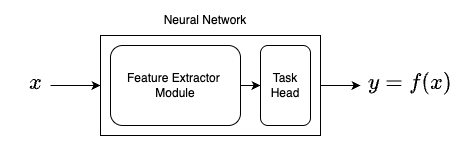

Neural Networks have two components:

- Feature Extractor Module

- Task specific head

You can experiment with simple neural networks at TensorFlow Playground.

The extracted features usually have a lower dimension than the data \( x \).
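The two-component view above can be sketched in a few lines. This is a minimal illustration in plain Python rather than PyTorch, so it runs without dependencies. The weights `W_feat` and `W_head`, the layer sizes, and the helper names are all hypothetical and hand-picked, not learned and not taken from the lecture code.

```python
# Hypothetical two-stage network: a feature extractor followed by a task head.
# All weights are hand-picked for illustration; a real network would learn them.

def relu(v):
    return [max(0.0, a) for a in v]

def linear(weights, bias, x):
    # Computes weights @ x + bias, with weights given as a list of rows.
    return [sum(w * a for w, a in zip(row, x)) + b
            for row, b in zip(weights, bias)]

# Feature extractor: maps a 4-dimensional input to 2 features.
W_feat = [[0.5, 0.5, 0.0, 0.0],
          [0.0, 0.0, 0.5, 0.5]]
b_feat = [0.0, 0.0]

# Task-specific head: maps the 2 features to 3 class scores.
W_head = [[1.0, -1.0],
          [-1.0, 1.0],
          [0.5, 0.5]]
b_head = [0.0, 0.0, 0.0]

def extract_features(x):
    return relu(linear(W_feat, b_feat, x))

def classify(x):
    scores = linear(W_head, b_head, extract_features(x))
    return scores.index(max(scores))  # index of the highest-scoring class

x = [1.0, 3.0, 0.0, 2.0]
features = extract_features(x)  # 2 numbers summarising 4 inputs
label = classify(x)
```

Swapping `W_head` for a different head (for example, a regression output) would reuse the same `extract_features`, which is the point of the split.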

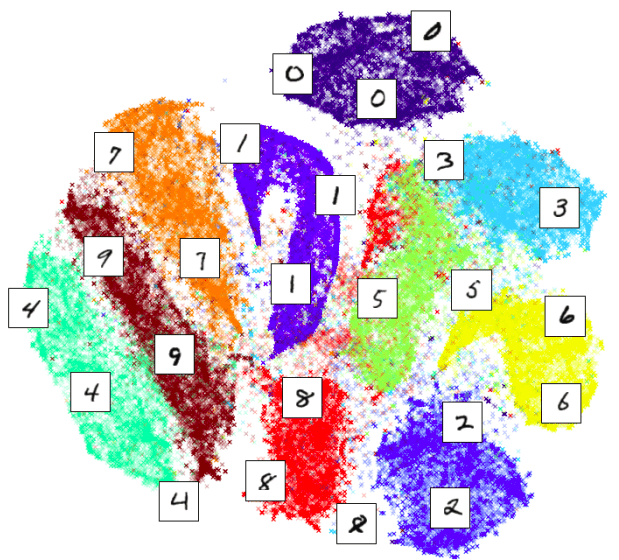

\text{Intro to GenAI: Data Distribution}

But what does it mean for two images to be close to each other?

They are close in a low-dimensional feature space.
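A toy example may make the distinction concrete. The sketch below assumes a hypothetical hand-written feature map (`toy_features`, the mean of each half of a 4-pixel "image") in place of a trained network. It shows that the nearest neighbour in pixel space and the nearest neighbour in feature space can differ.

```python
import math

def toy_features(image):
    # Hypothetical feature map: mean intensity of each half of the image.
    half = len(image) // 2
    top, bottom = image[:half], image[half:]
    return [sum(top) / len(top), sum(bottom) / len(bottom)]

img_a = [1.0, 0.0, 0.2, 0.2]
img_b = [0.0, 1.0, 0.2, 0.2]  # same half-wise brightness as img_a, pixels shifted
img_c = [1.0, 0.0, 1.0, 1.0]  # shares pixels with img_a, brighter bottom half

# Distances measured directly on pixels.
pixel_ab = math.dist(img_a, img_b)
pixel_ac = math.dist(img_a, img_c)

# Distances measured on the 2-dimensional features.
feat_ab = math.dist(toy_features(img_a), toy_features(img_b))
feat_ac = math.dist(toy_features(img_a), toy_features(img_c))

print(f"pixel space:   d(a,b)={pixel_ab:.3f}  d(a,c)={pixel_ac:.3f}")
print(f"feature space: d(a,b)={feat_ab:.3f}  d(a,c)={feat_ac:.3f}")
```

In pixel space `img_c` is the closer neighbour of `img_a`, while in feature space `img_b` is. Which notion of closeness is useful depends on what the feature extractor has learned to preserve.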

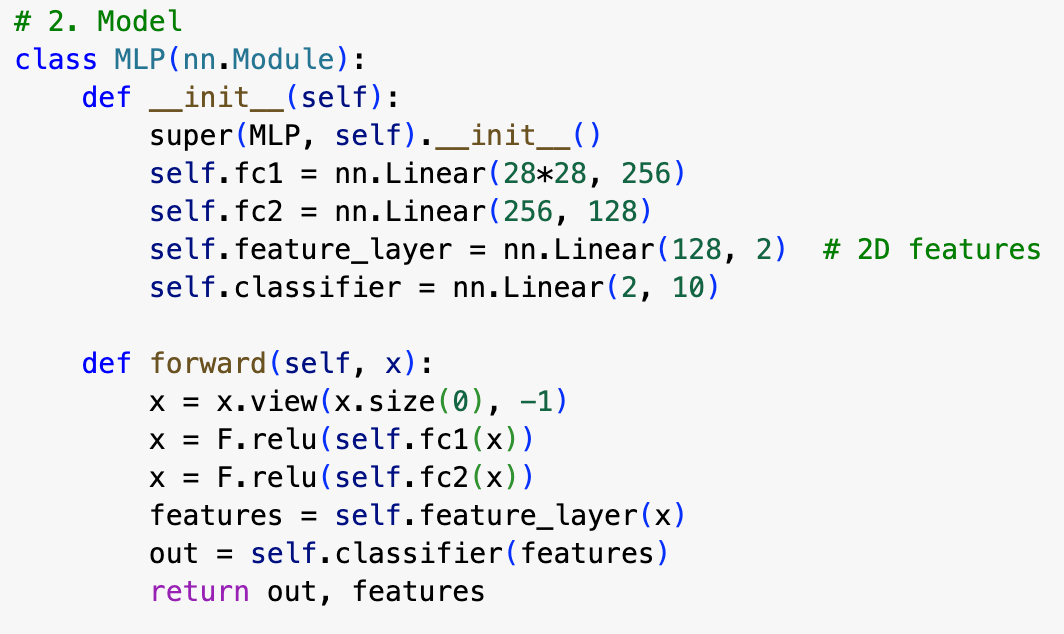

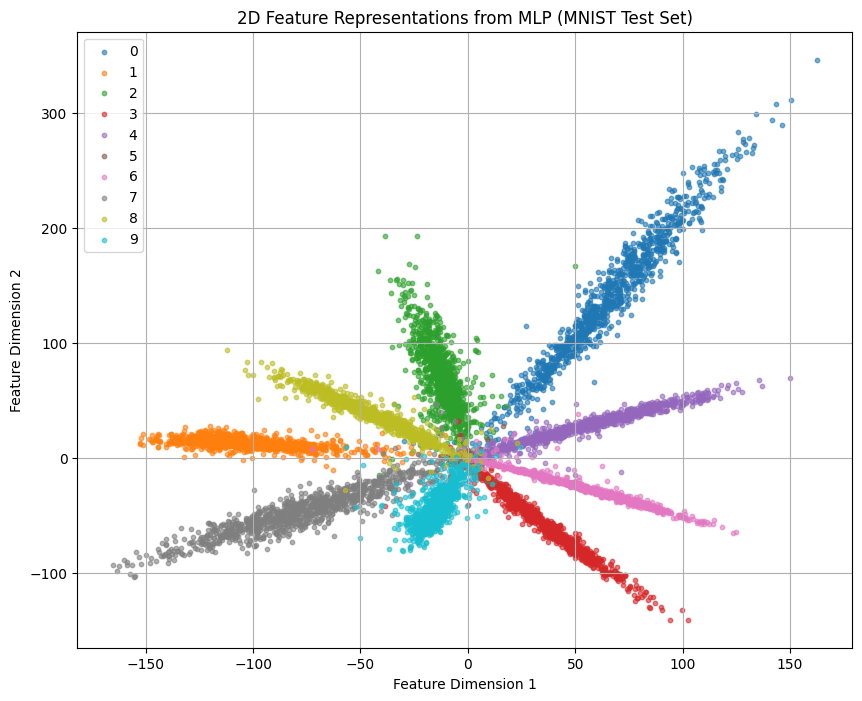

\text{PyTorch Code sample - Feature Extraction - NNs}

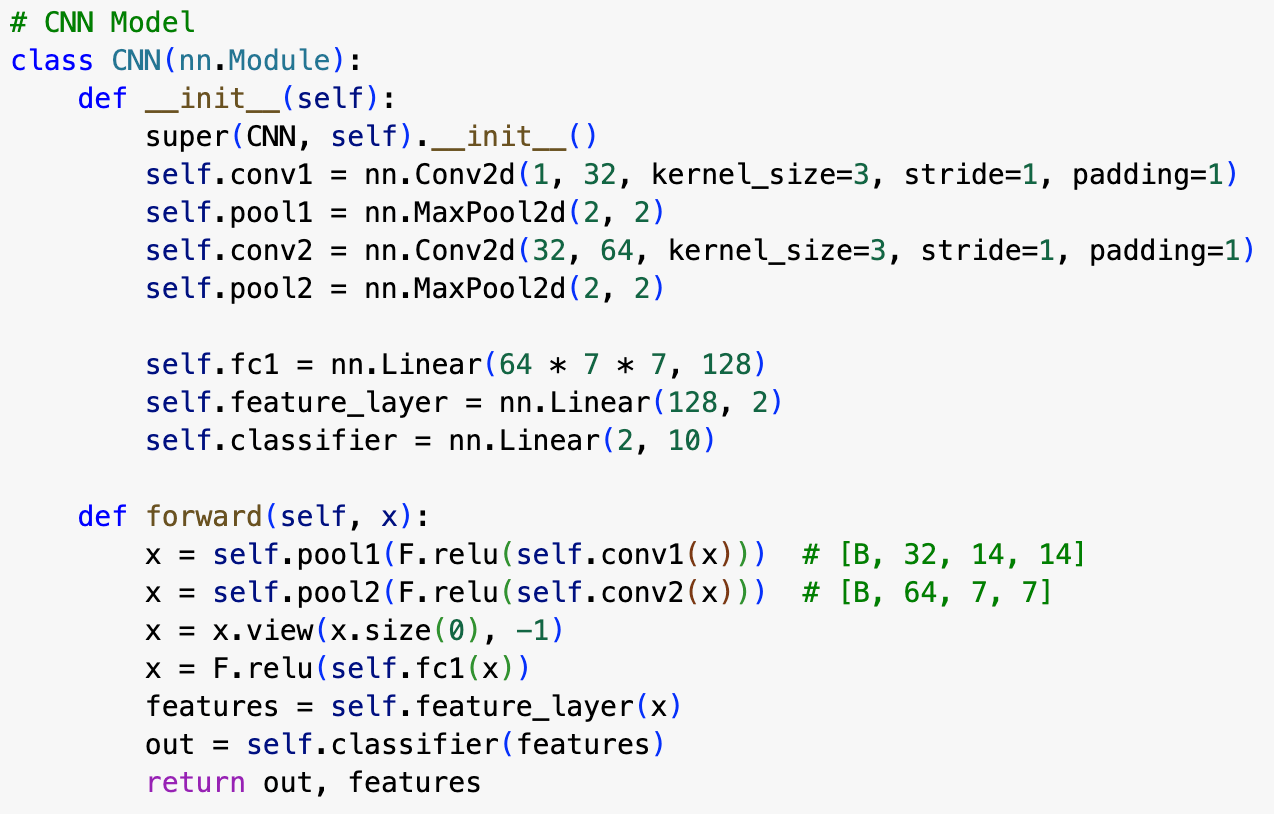

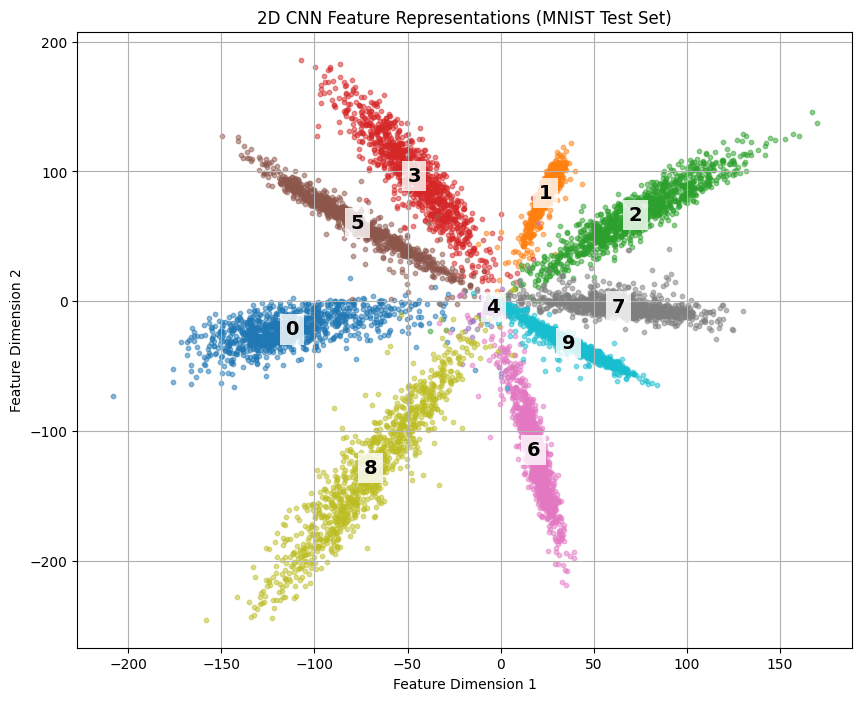

\text{PyTorch Code sample - Feature Extraction - CNNs}

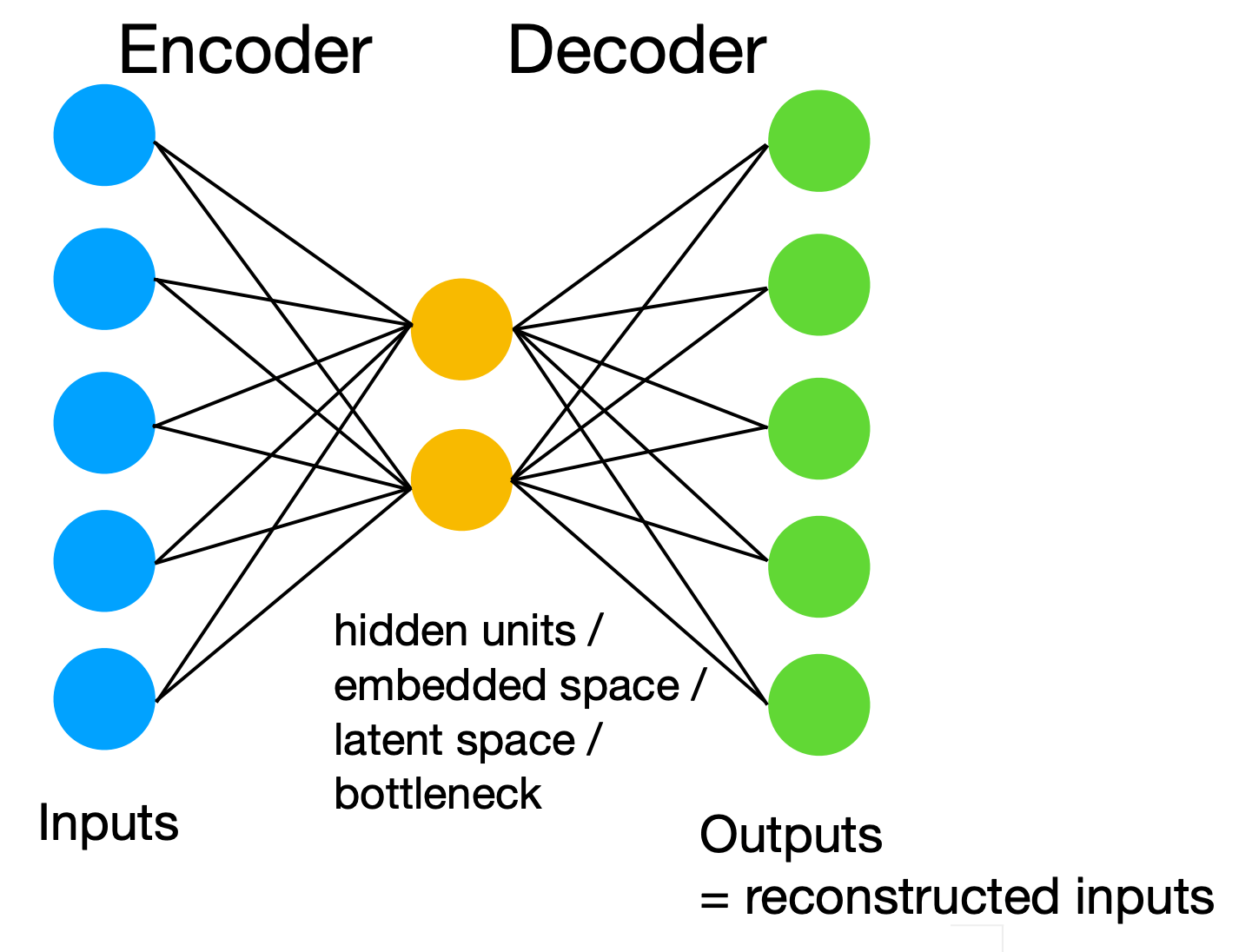

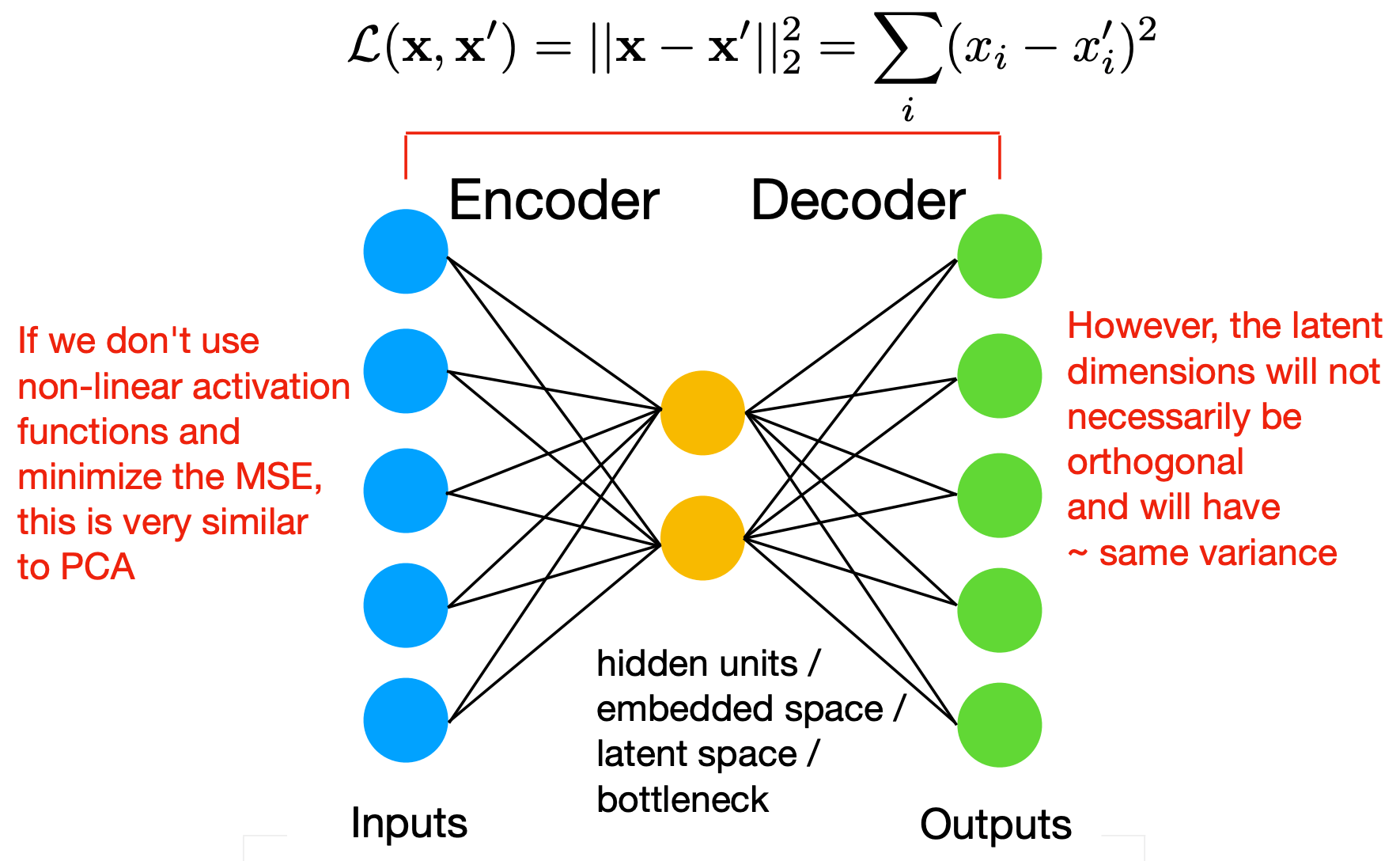

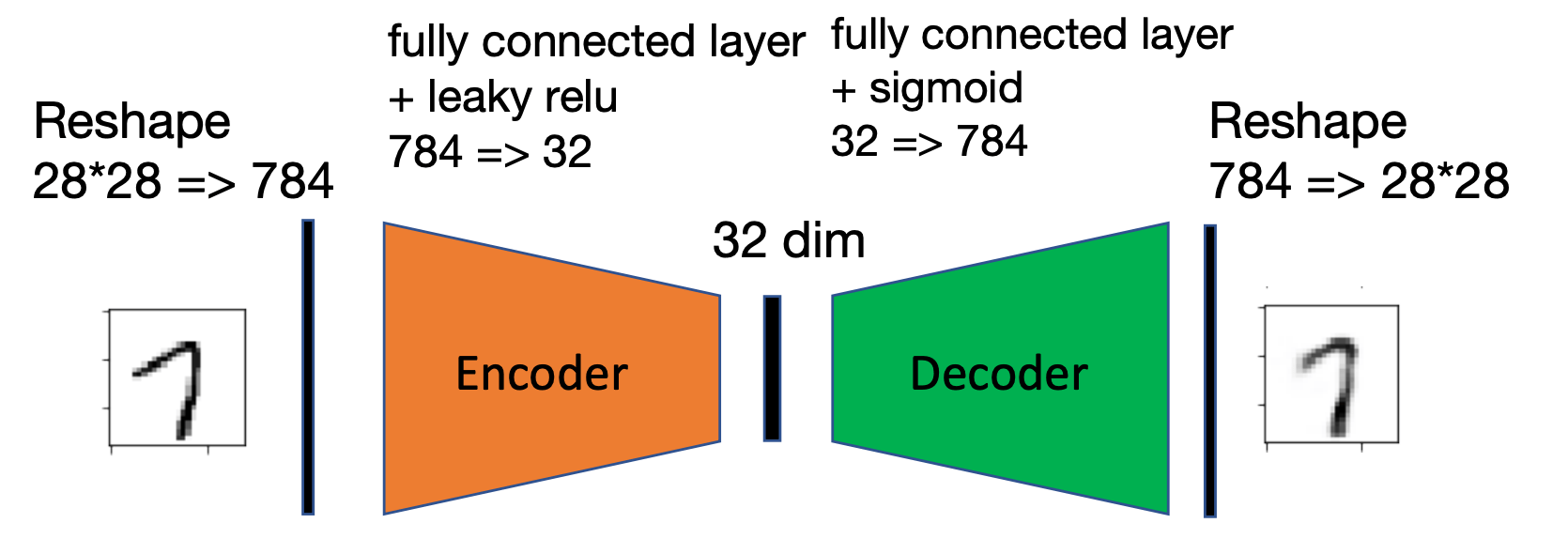

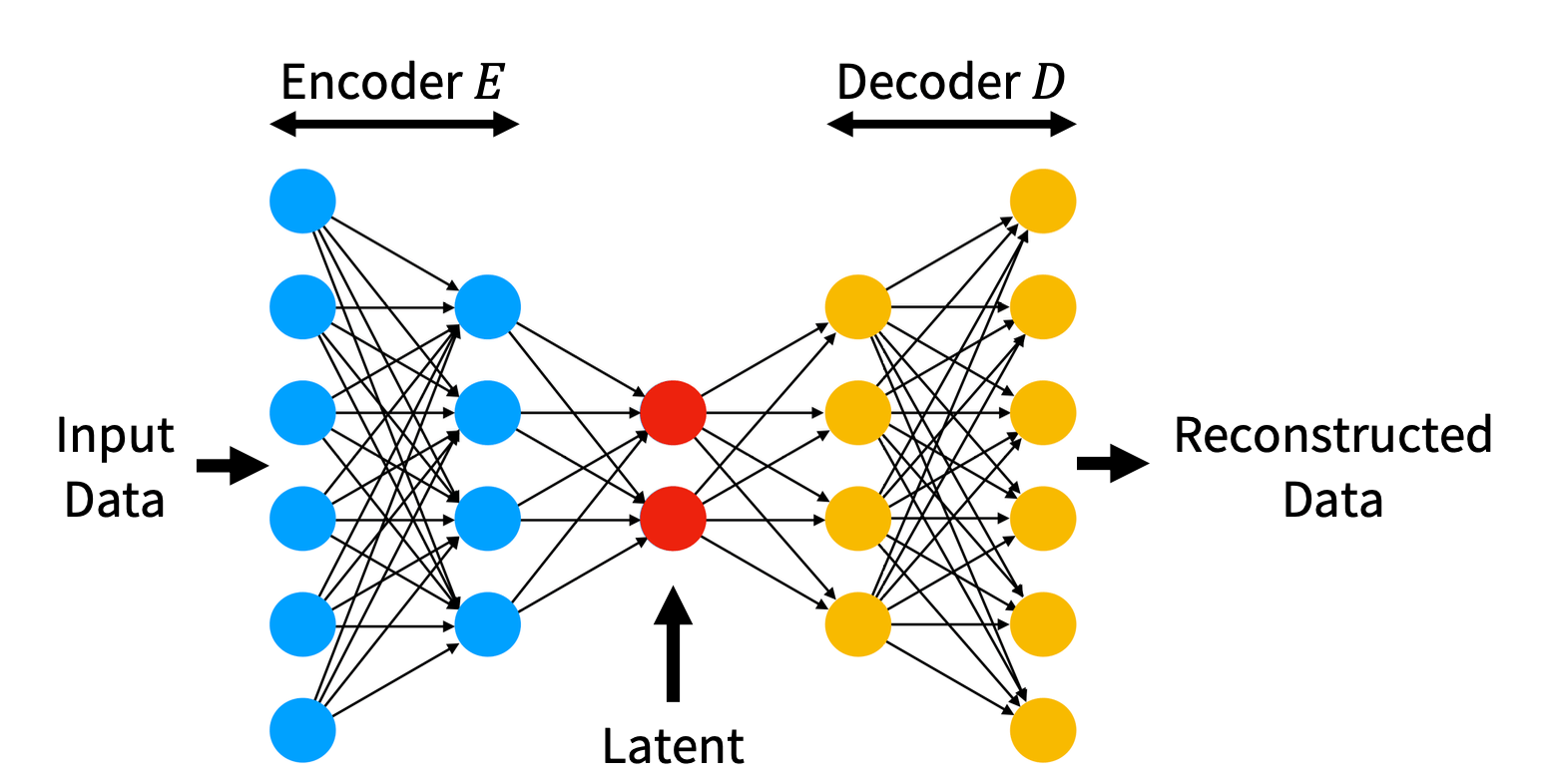

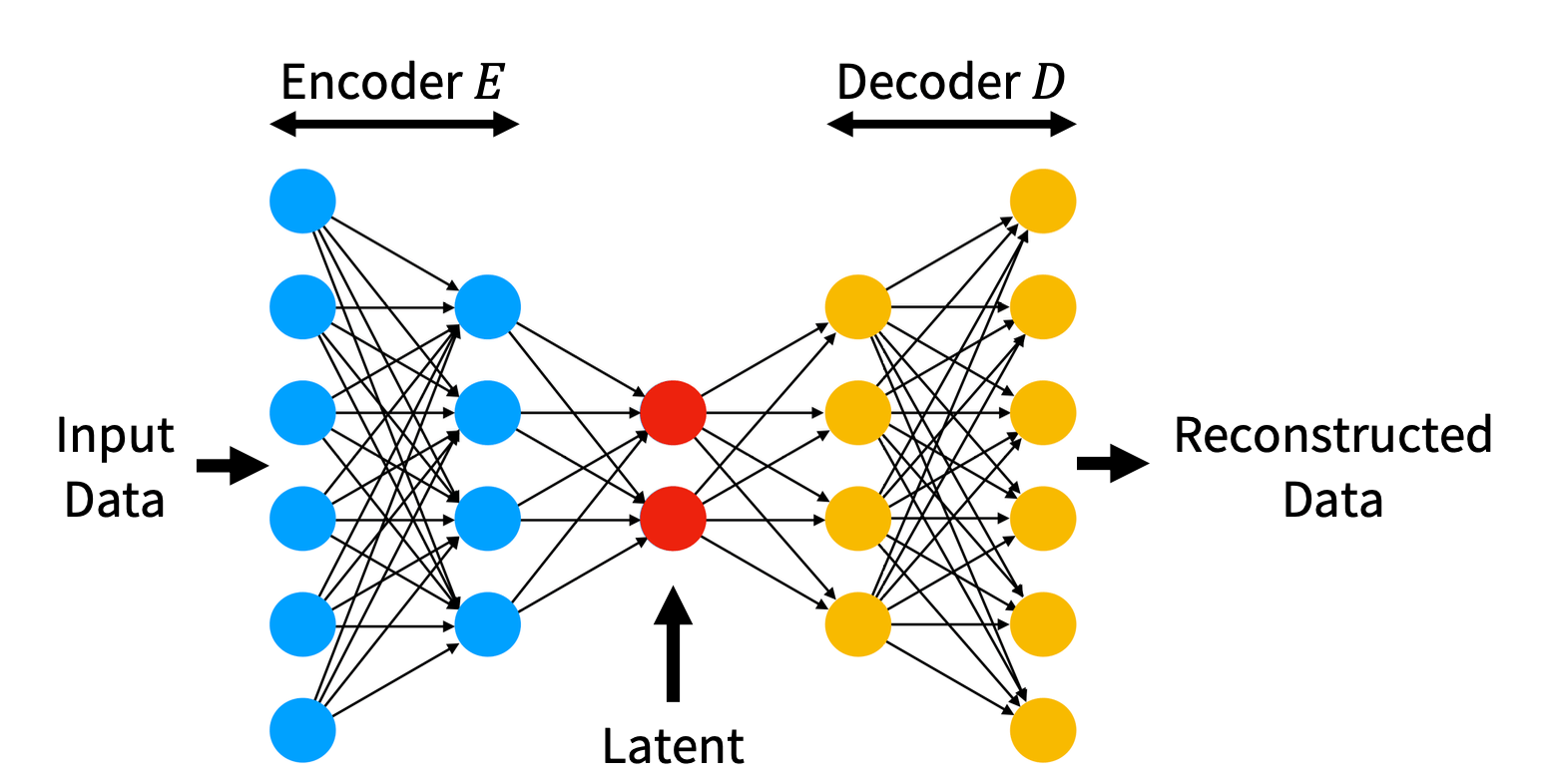

\text{AutoEncoders}

Simple MLP AutoEncoder

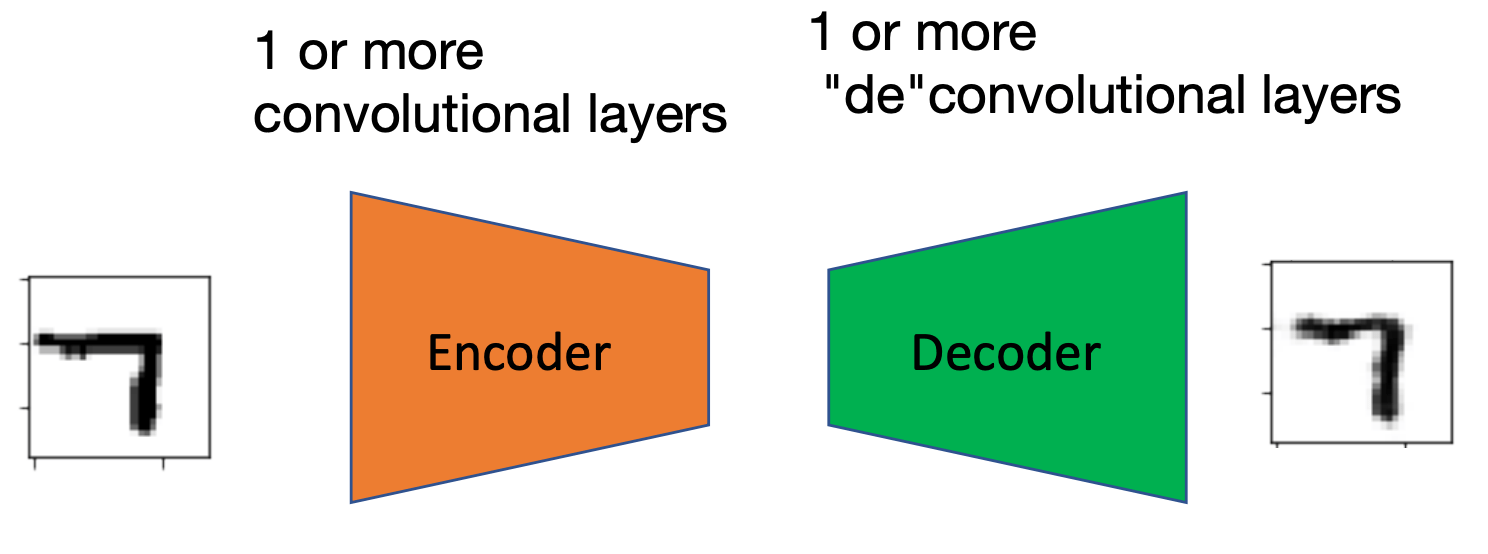

\text{AutoEncoders}

Convolutional AutoEncoder

\text{Variational AutoEncoders}

- Generative Model: \( P(x \mid z) \)

- Posterior: \( P(z \mid x) \)
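The two distributions above are tied together by the evidence lower bound (ELBO) listed in the agenda. A standard statement of it is given below. It assumes notation that is not on this slide: \( Q(z \mid x) \) for the encoder's approximation to the posterior \( P(z \mid x) \), and \( P(z) \) for the prior over latents.

\[
\log P(x) \;\ge\; \mathbb{E}_{Q(z \mid x)}\bigl[\log P(x \mid z)\bigr] \;-\; D_{\mathrm{KL}}\bigl(Q(z \mid x)\,\|\,P(z)\bigr)
\]

The first term rewards accurate reconstruction of \( x \) from sampled latents. The second term penalizes an approximate posterior that drifts away from the prior.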

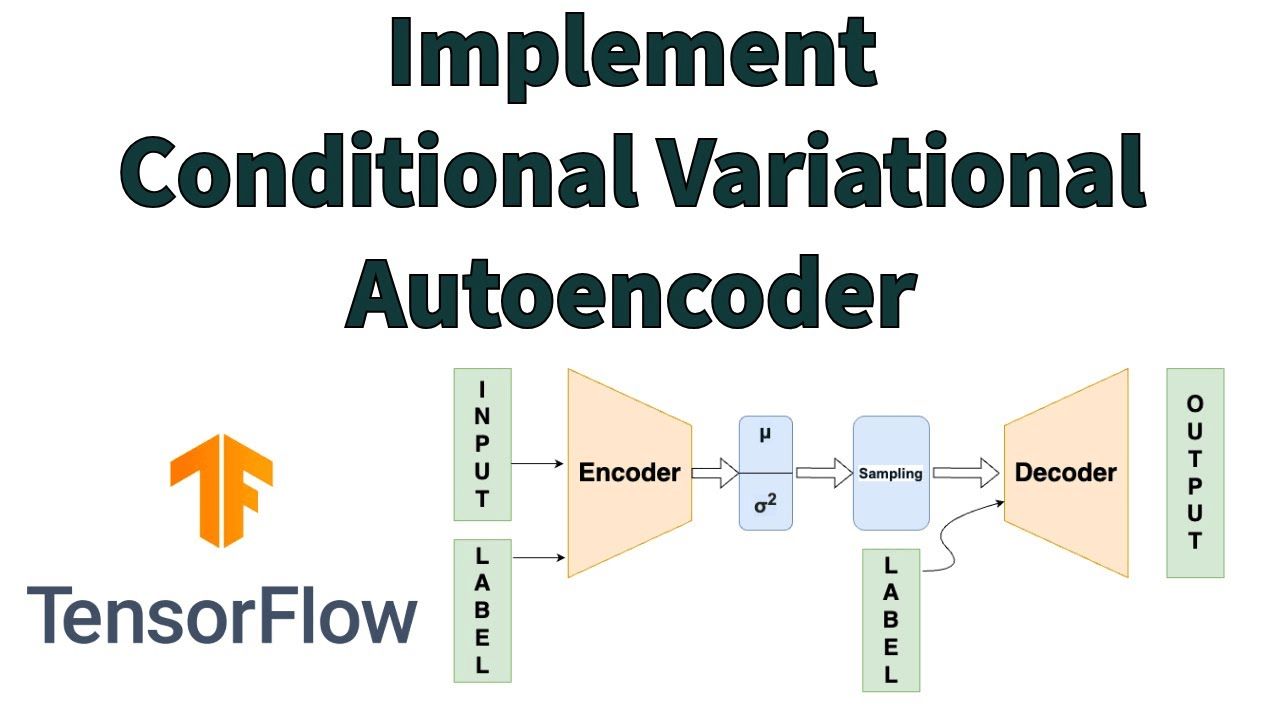

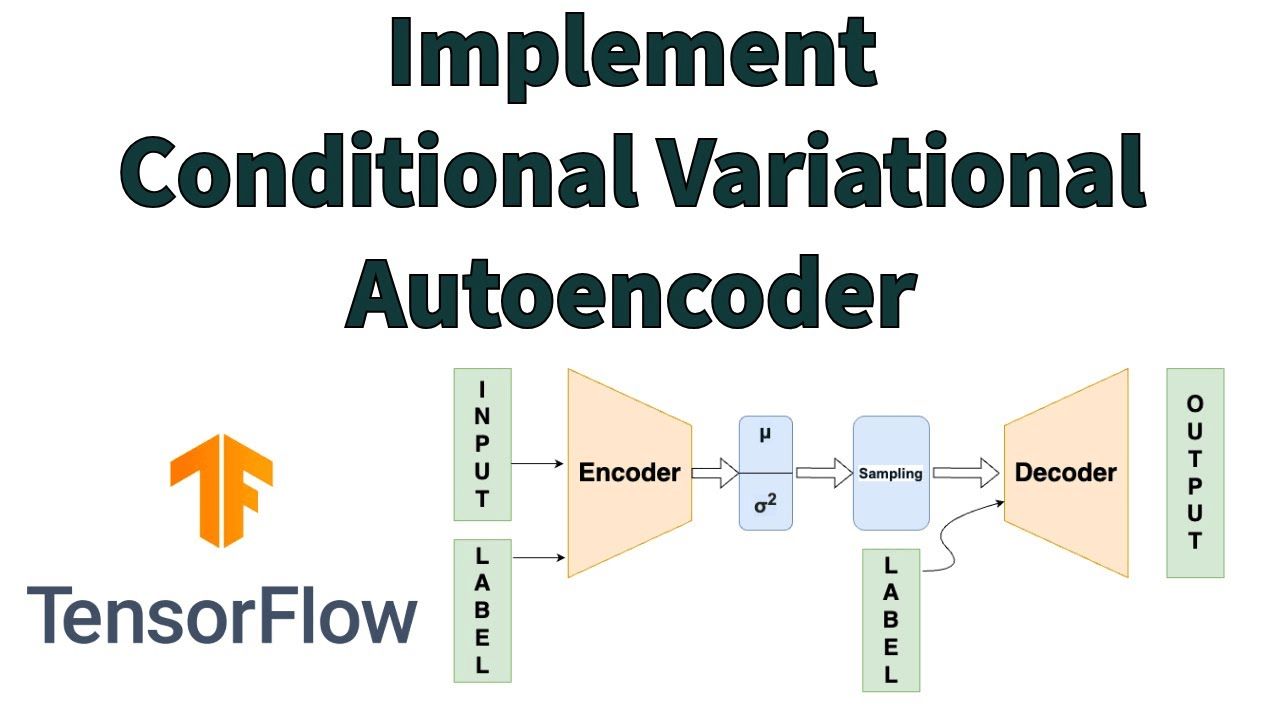

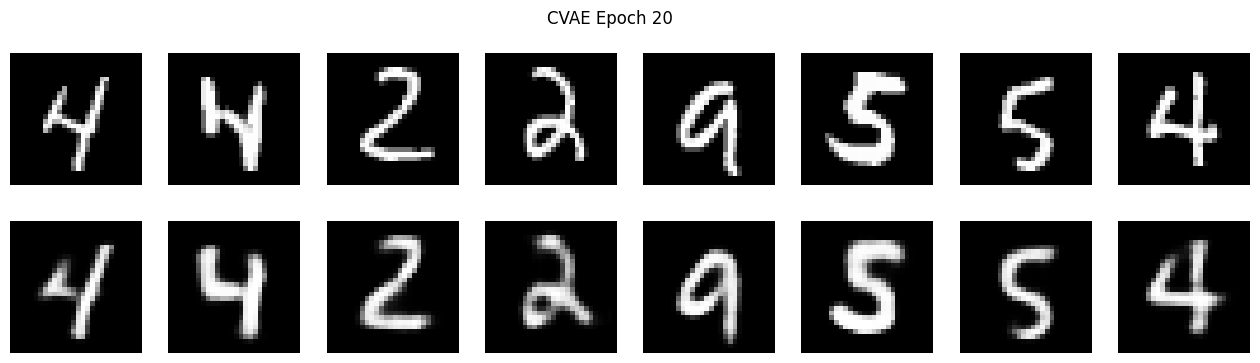

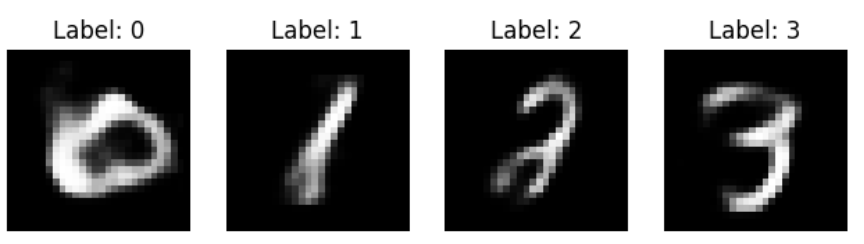
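Two pieces recur in most VAE implementations: the reparameterization trick and the KL divergence between a diagonal Gaussian posterior and a standard normal prior. The sketch below is written in plain Python rather than PyTorch so it runs without dependencies. It assumes a Gaussian encoder that outputs a mean `mu` and a log-variance `logvar`, and the function names are hypothetical.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    # z = mu + sigma * eps with eps ~ N(0, 1). The randomness lives in eps,
    # so z stays a deterministic, differentiable function of mu and logvar.
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    # Closed form of KL( N(mu, diag(exp(logvar))) || N(0, I) ).
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))

rng = random.Random(0)
mu, logvar = [1.0, -0.5], [0.0, 0.0]
z = reparameterize(mu, logvar, rng)      # one latent sample
kl = kl_to_standard_normal(mu, logvar)   # regularization term of the loss
```

The KL value is zero when the posterior already matches the prior (`mu` all zeros, `logvar` all zeros) and grows as the posterior moves away from it.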

\text{Conditional Variational AutoEncoders}

- Generative Model: \( P(x \mid z) \)

- Posterior: \( P(z \mid x) \)
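The slide does not say how the condition enters the model. One common choice, assumed here, is to append a one-hot encoding of the class label to the latent vector before it reaches the decoder. The helper names `one_hot` and `decoder_input` are hypothetical.

```python
def one_hot(label, num_classes):
    # Encode an integer class label as a vector with a single 1.0 entry.
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def decoder_input(z, label, num_classes):
    # Conditioning by concatenation: the decoder sees [z, one_hot(label)].
    return list(z) + one_hot(label, num_classes)

z = [0.3, -1.2]
conditioned = decoder_input(z, label=2, num_classes=4)
```

At generation time, the same latent `z` paired with different labels asks the decoder for different classes.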

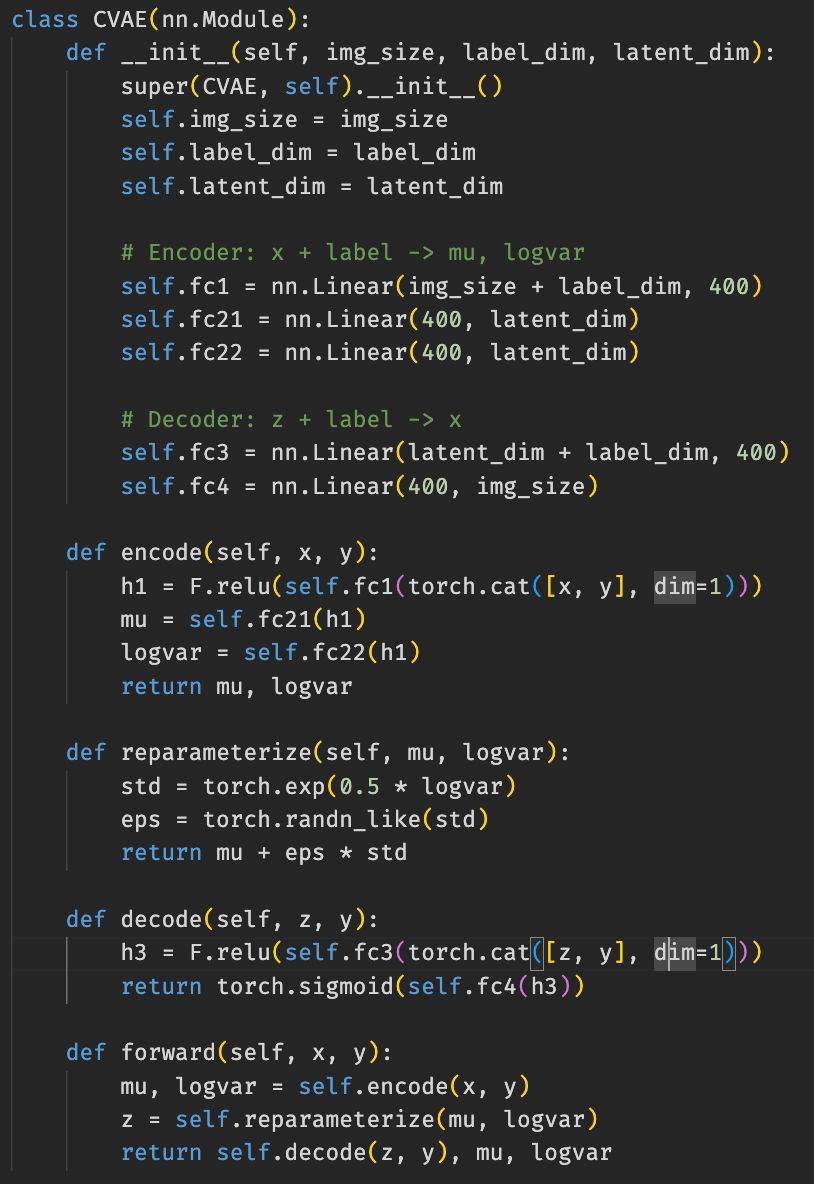

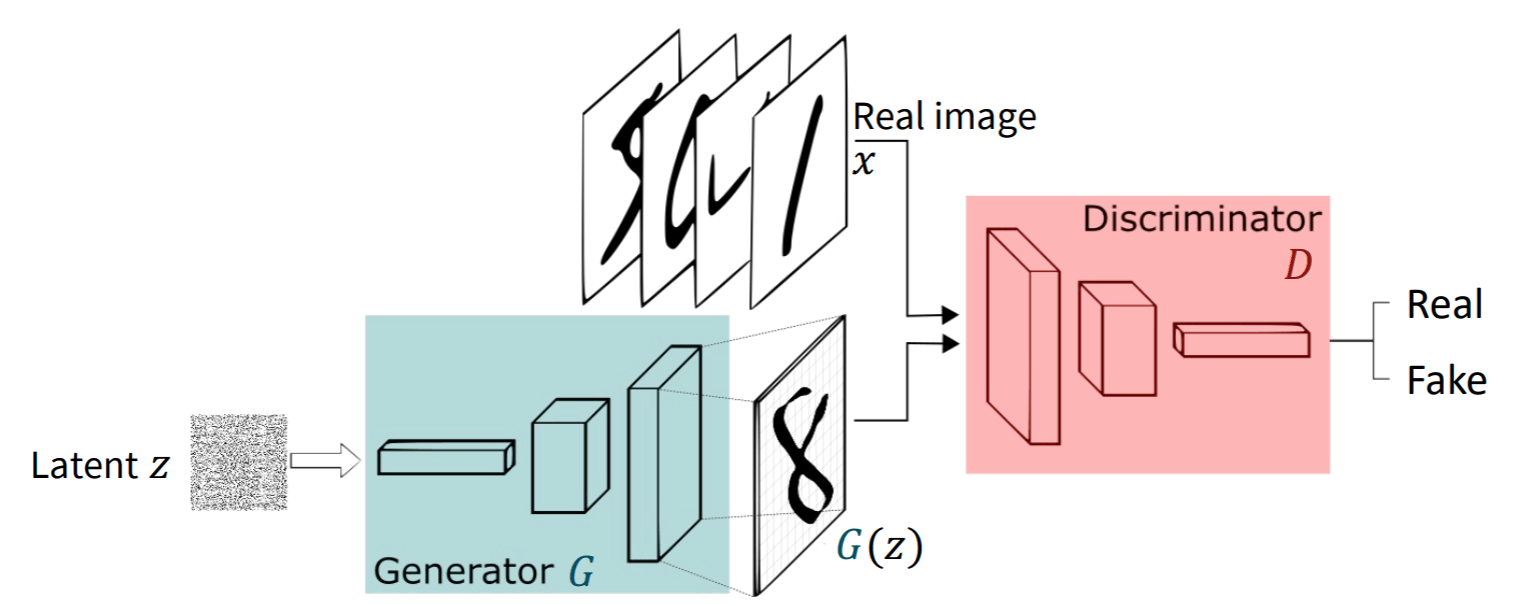

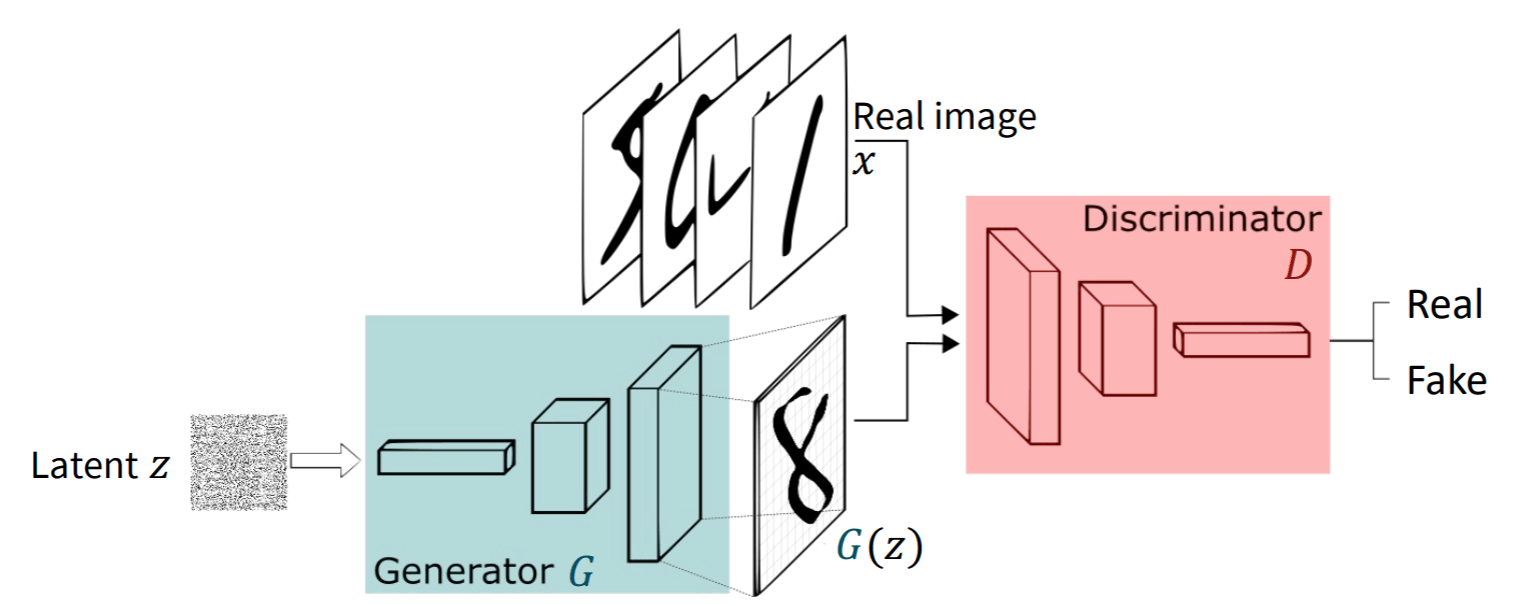

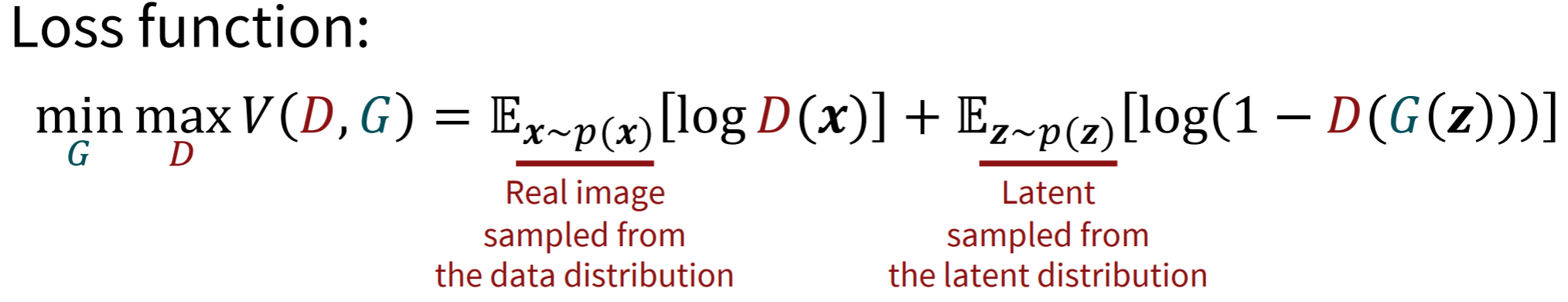

\text{GANs}

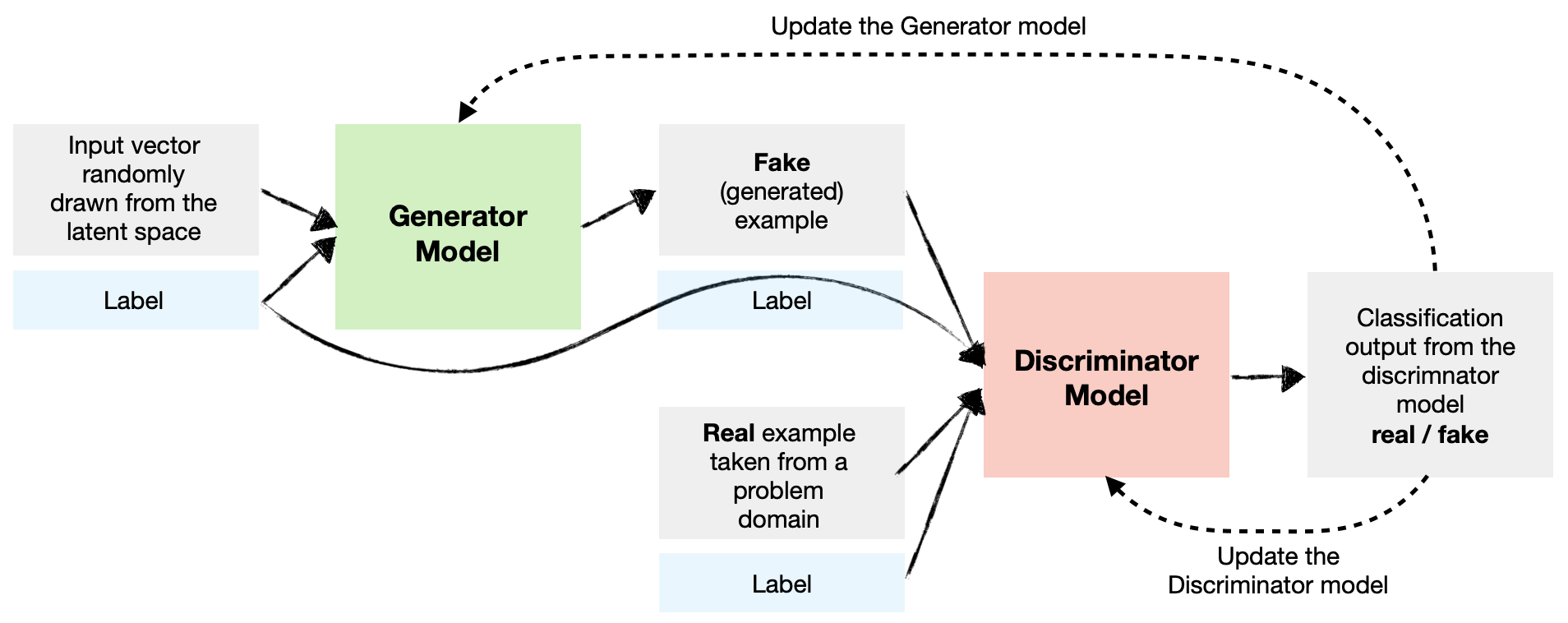

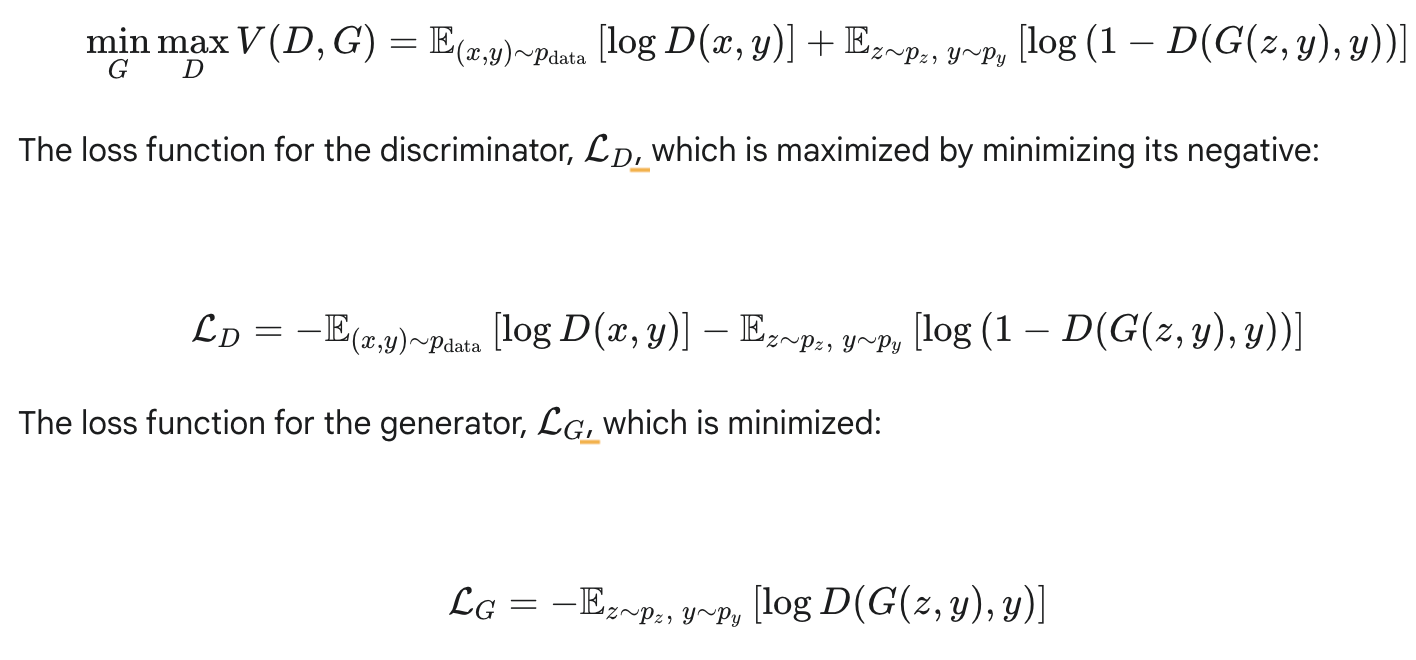

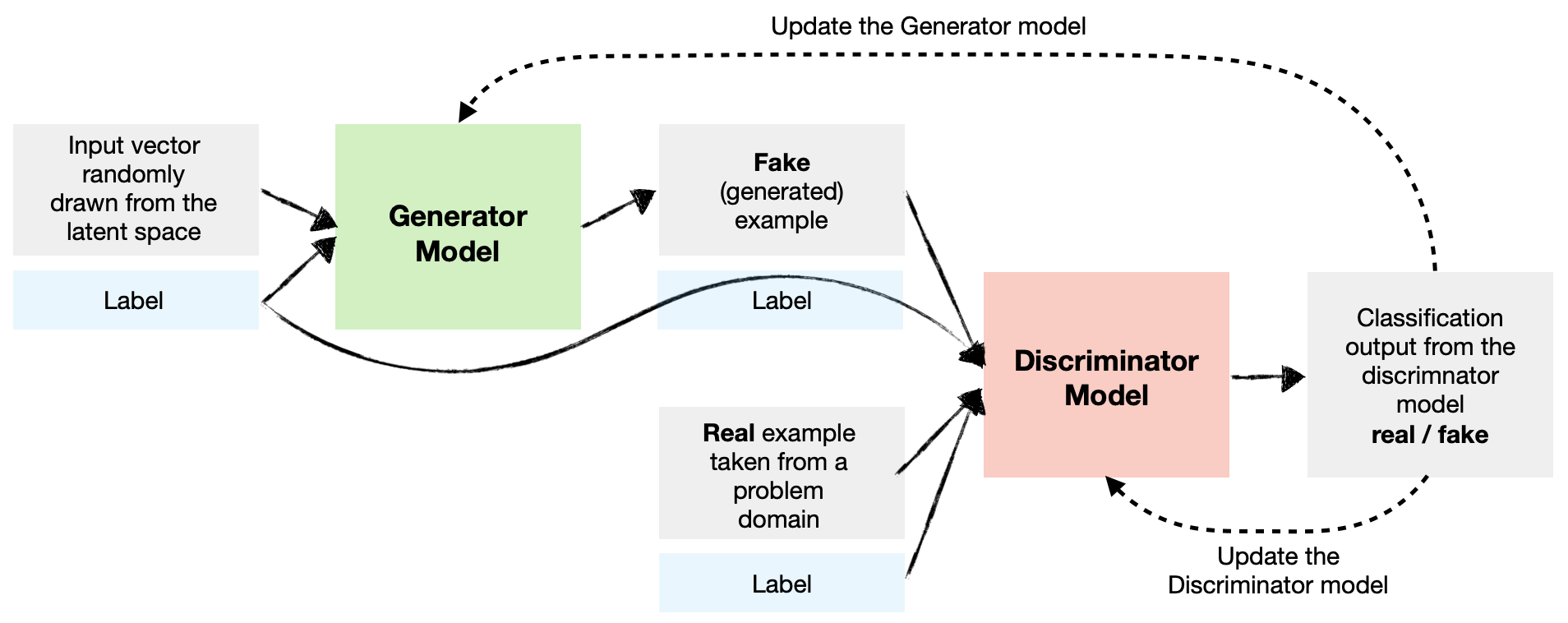

\text{Conditional GANs}

Training dynamics

1. Draw a batch of real images \( x_i \) with labels \( y_i \).

2. Sample noise \( z_i \) and random labels \( \tilde{y}_i \).

3. Update the Discriminator on real pairs \( (x_i,y_i) \) vs. fake pairs \( (G(z_i,\tilde{y}_i),\tilde{y}_i) \).

4. Update the Generator so that \( D\bigl(G(z_i,\tilde{y}_i),\tilde{y}_i\bigr) \) classifies those fakes as real.
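The two updates in the steps above can be expressed as loss functions over the discriminator's outputs. The sketch below is in plain Python rather than PyTorch and leaves out the networks and the optimizer. It assumes the discriminator returns a probability that an (image, label) pair is real, and it assumes the common non-saturating generator loss. The function names are hypothetical.

```python
import math

def bce(p, target, eps=1e-12):
    # Binary cross-entropy for one probability p against a 0/1 target.
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def discriminator_loss(d_real, d_fake):
    # Step 3: real (image, label) pairs should score 1, fake pairs 0.
    return mean(bce(p, 1.0) for p in d_real) + mean(bce(p, 0.0) for p in d_fake)

def generator_loss(d_fake):
    # Step 4: the generator wants its fake pairs scored as real (target 1).
    return mean(bce(p, 1.0) for p in d_fake)

# Hypothetical discriminator outputs for one batch.
d_real = [0.9, 0.8]  # D(x_i, y_i) on real pairs
d_fake = [0.1, 0.3]  # D(G(z_i, label), label) on generated pairs

loss_d = discriminator_loss(d_real, d_fake)
loss_g = generator_loss(d_fake)
```

With these numbers the discriminator is winning, so `loss_d` is small and `loss_g` is large. As the generator improves, `d_fake` rises and the two losses move in opposite directions.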
