Slides adapted from Dr. Chen Wang CSE 573 lecture slides

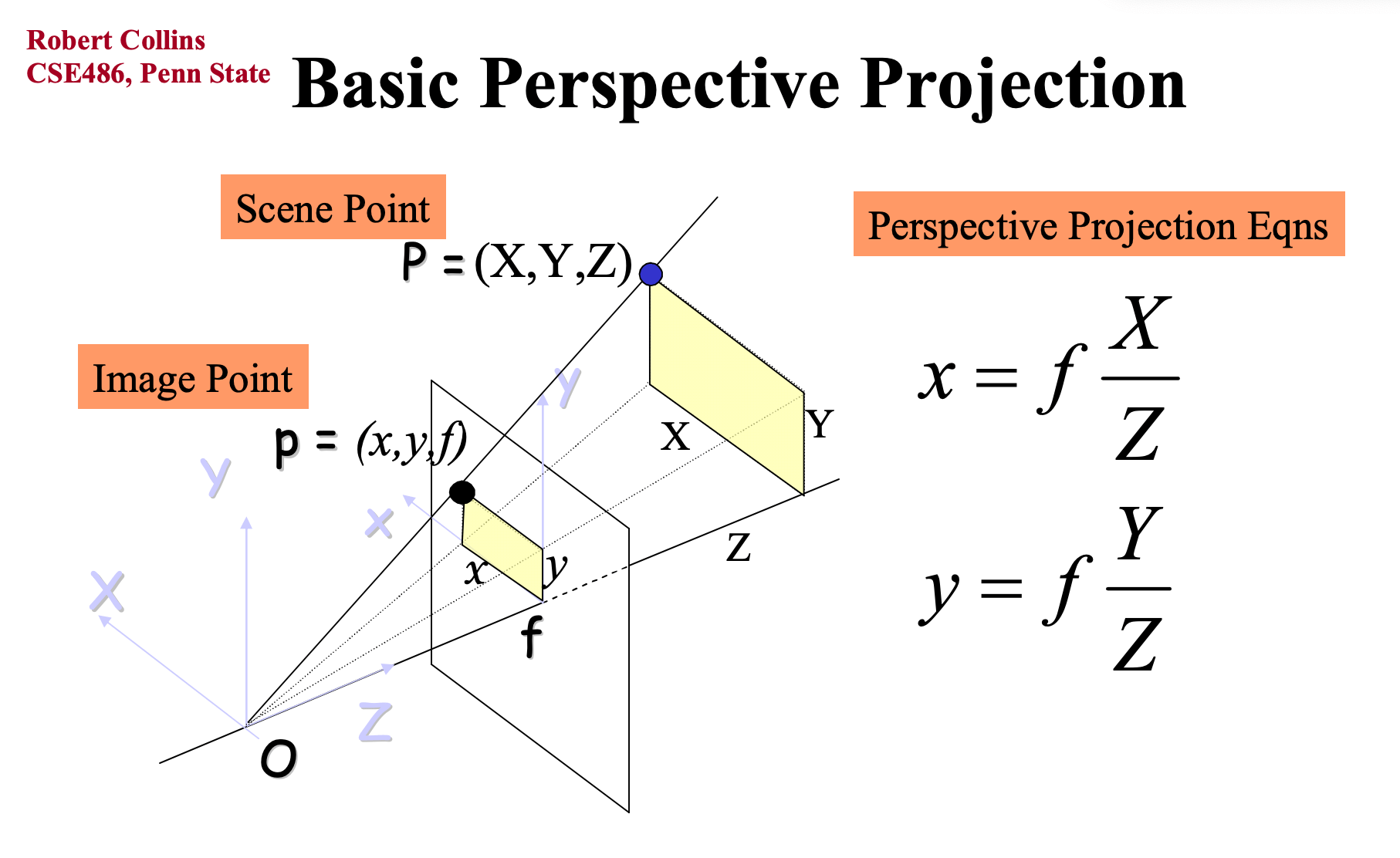

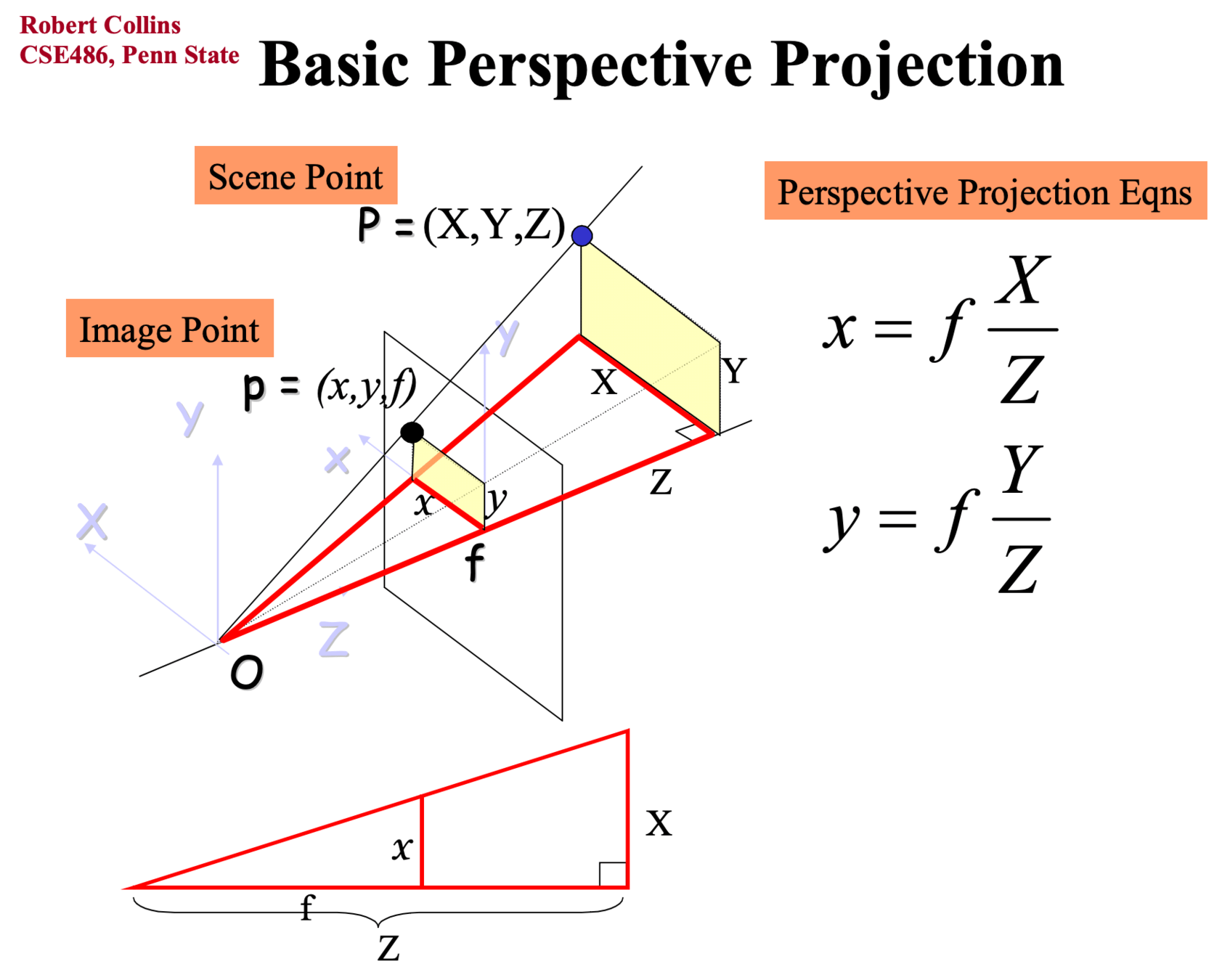

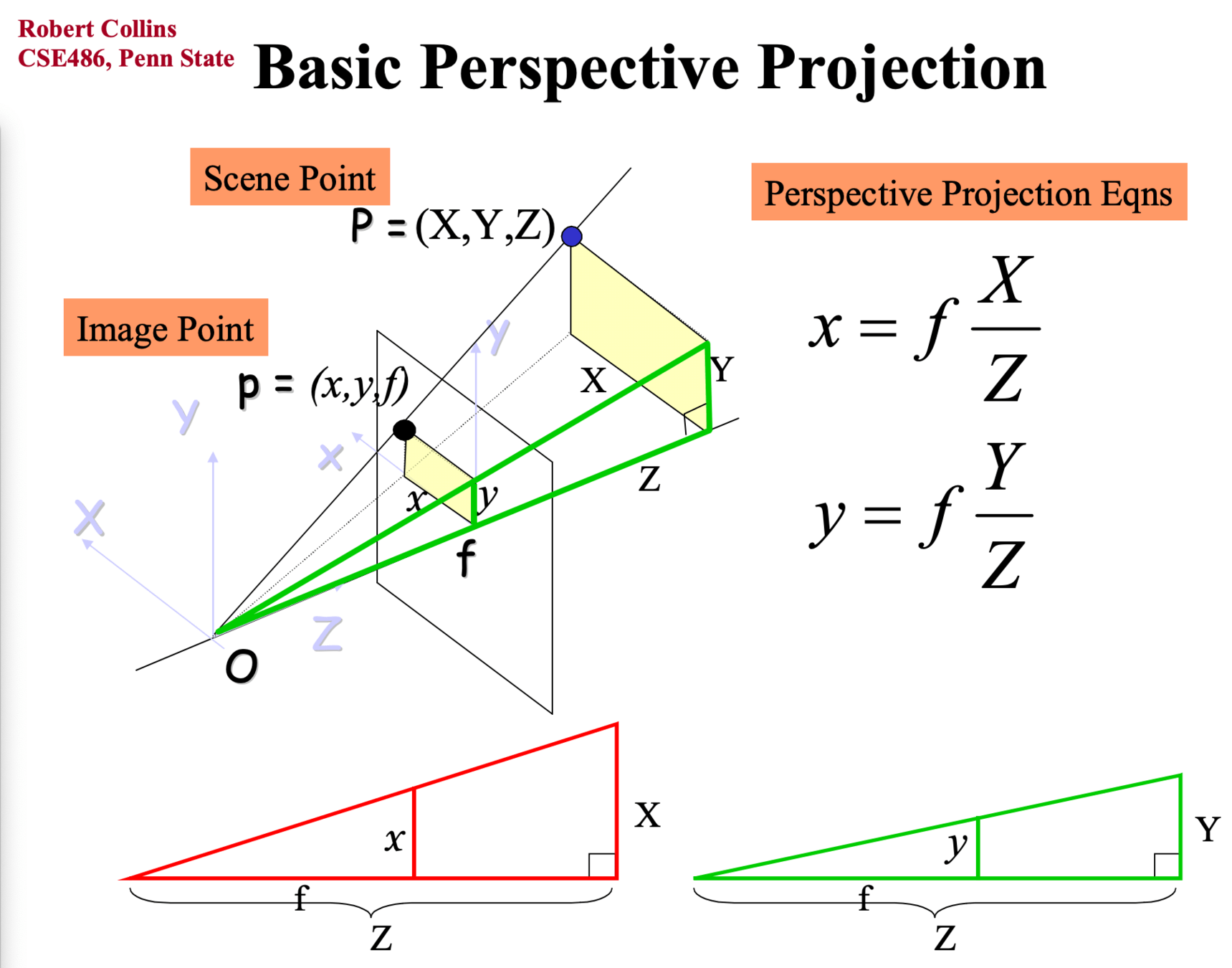

- Recap of Perspective Projection

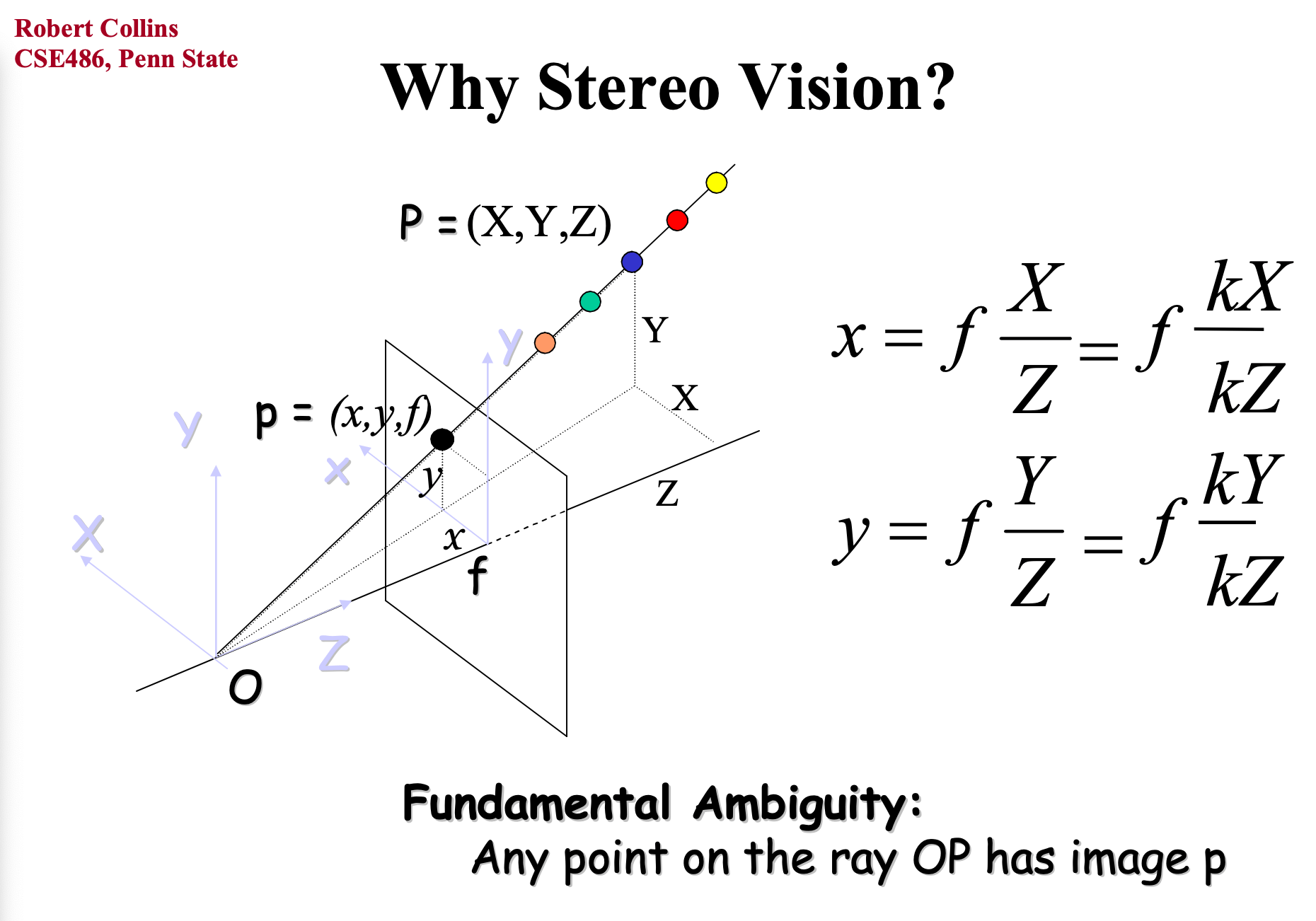

- Introduction to Stereo Vision

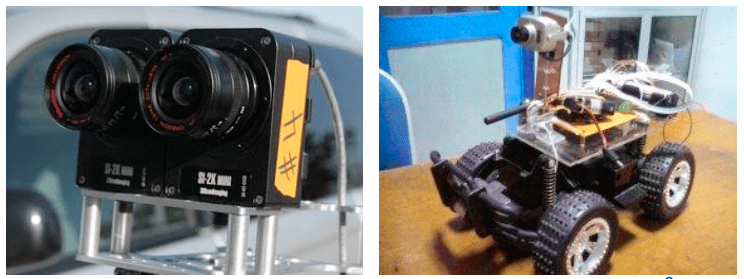

- Example Projects for Depth Estimation

Agenda of this Lecture:

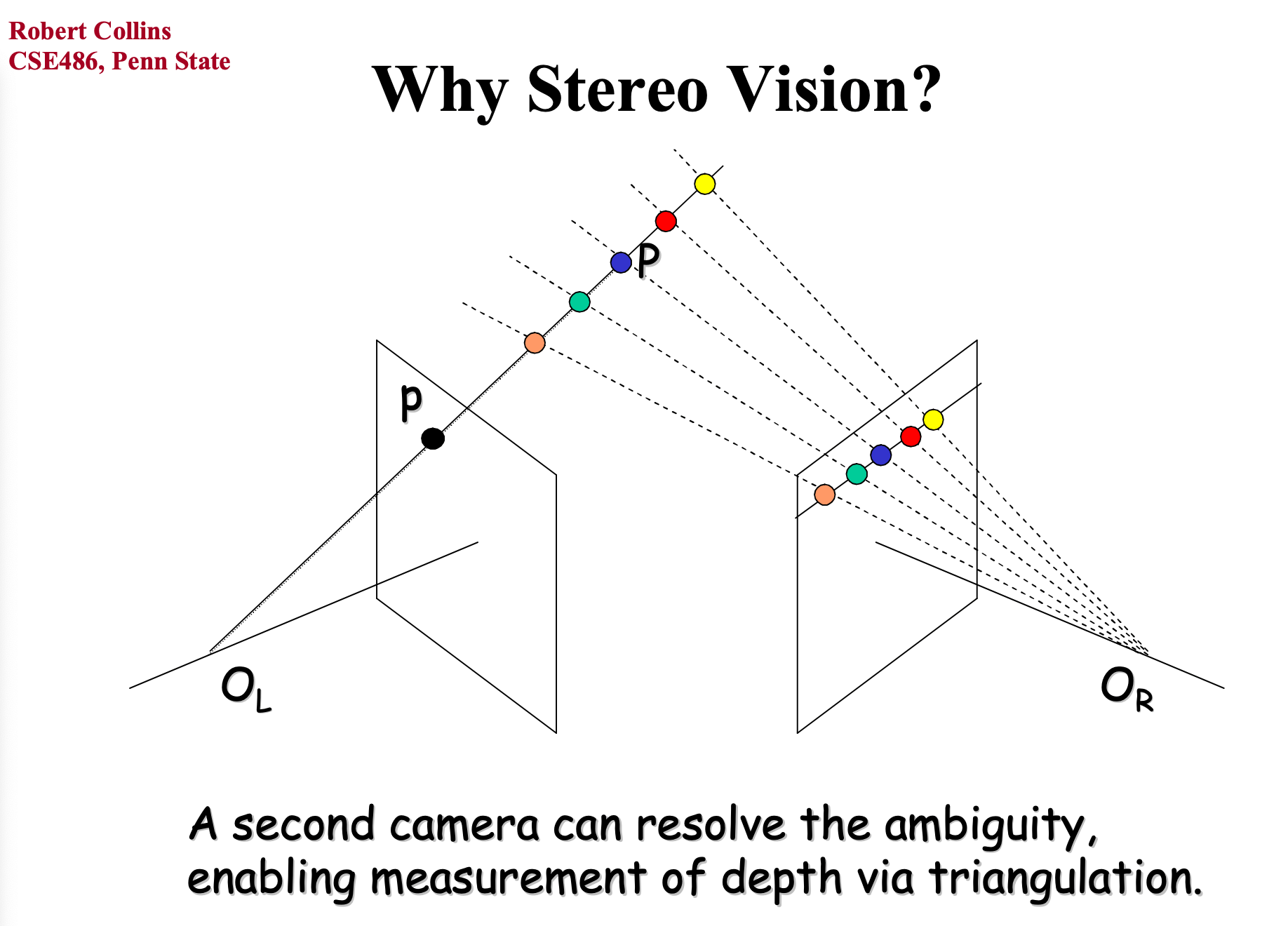

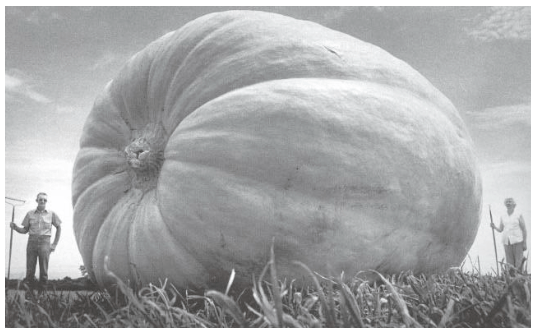

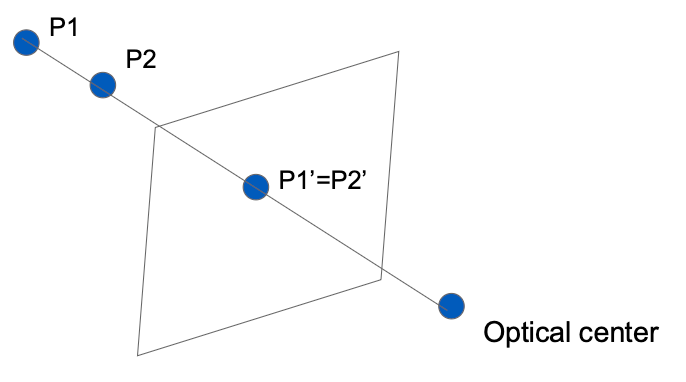

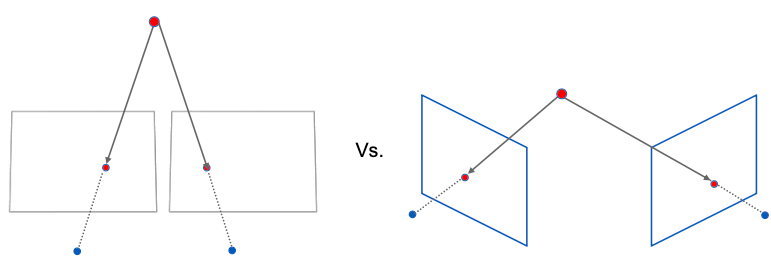

Structure and depth are inherently ambiguous from single views.


- Shading

- Focus/Defocus

- Texture

- Perspective

- Motion

- Occlusion

Prados & Faugeras, 2006

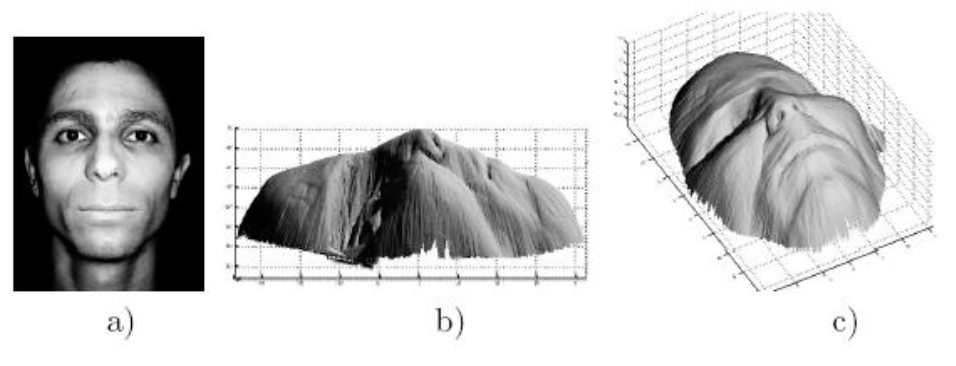

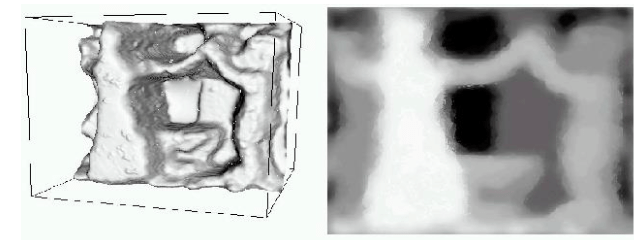

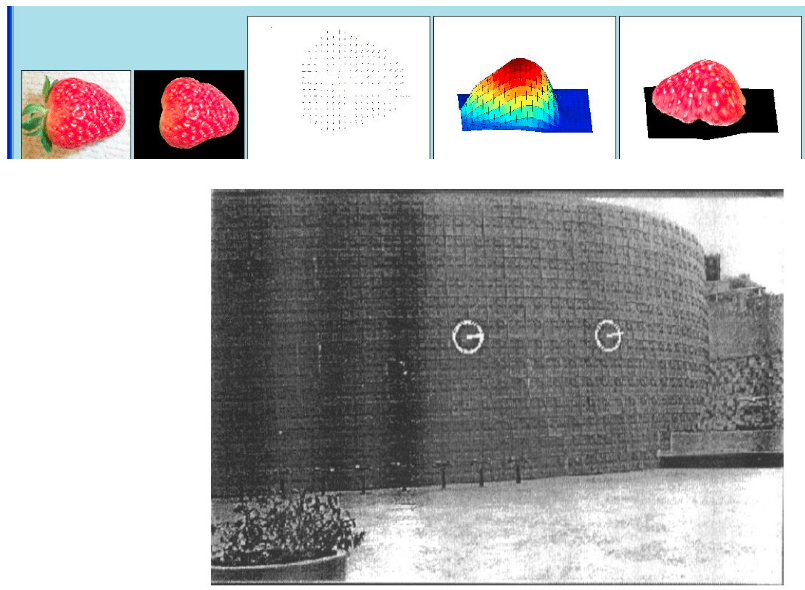

- Same point of view, different camera parameters

- 3D shape / depth estimation

H. Jin & P. Favaro, 2002

A.M. Loh

L. Zhang

Keunhong Park, Nerfies, 2021

http://www.johnsonshawmuseum.org

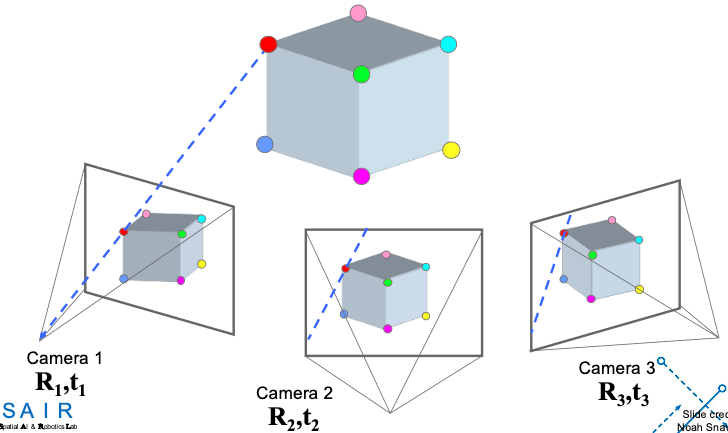

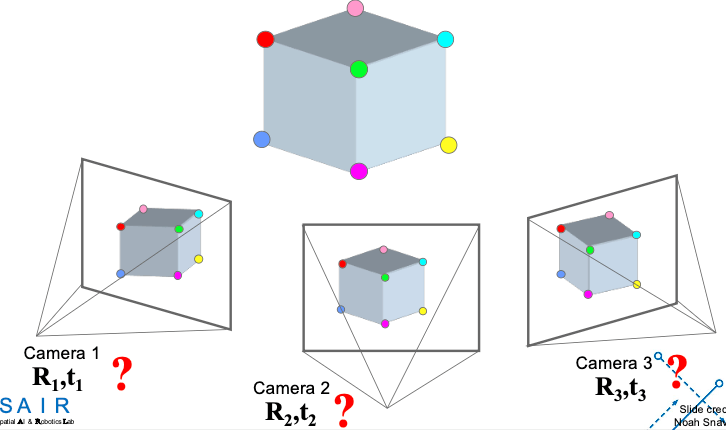

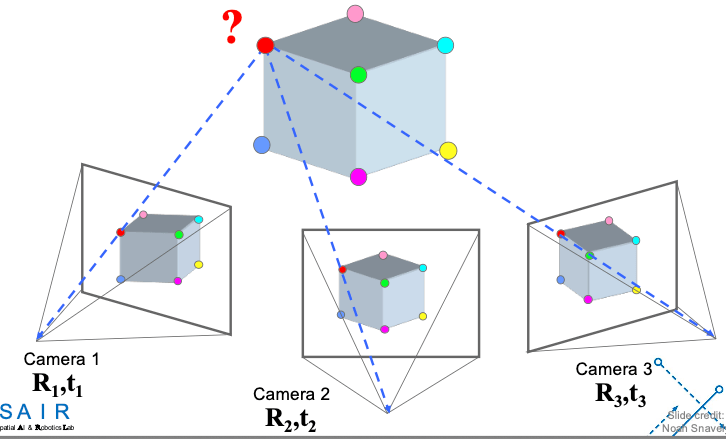

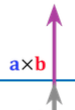

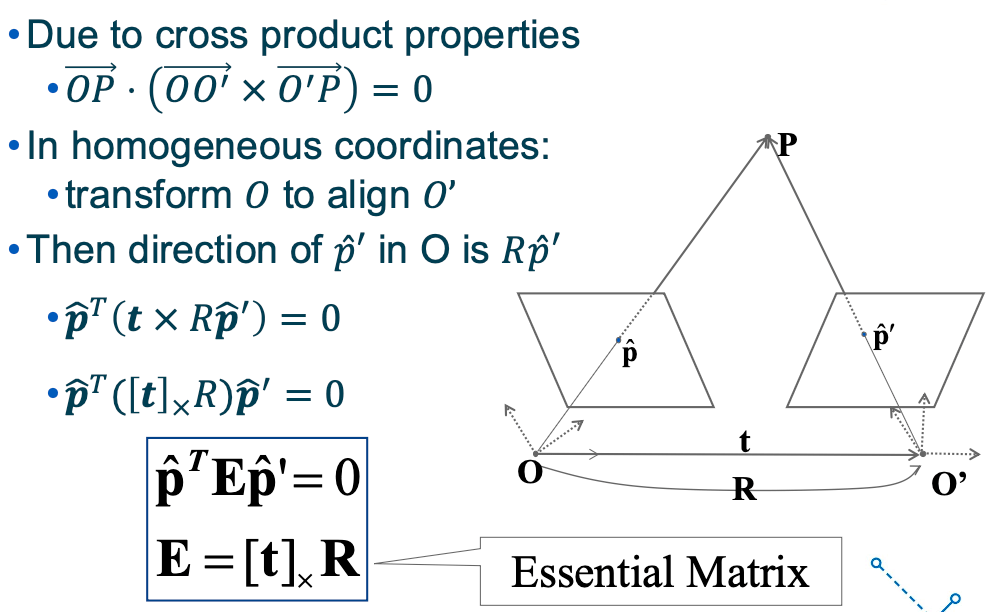

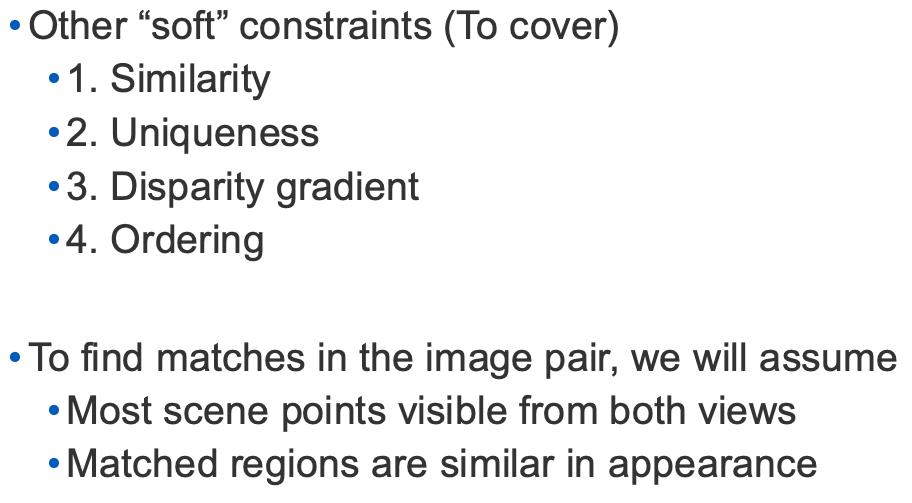

We will need to consider:

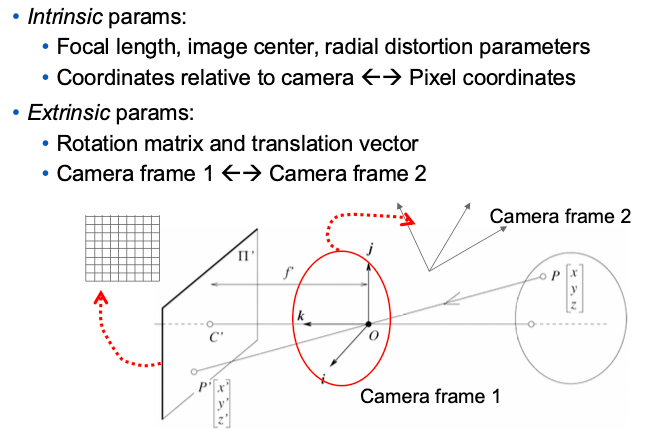

- Info on camera pose ("Calibration")

- Image point correspondences (feature detection/matching)

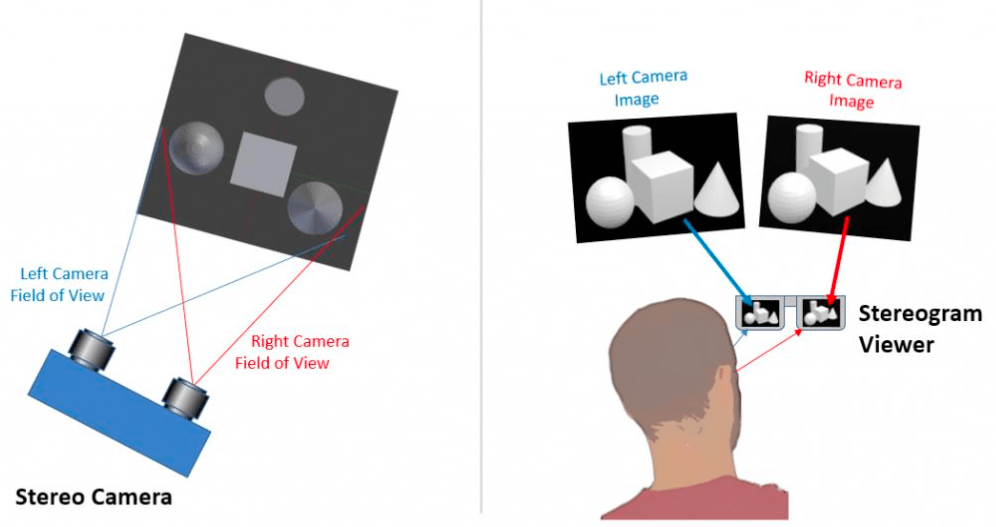

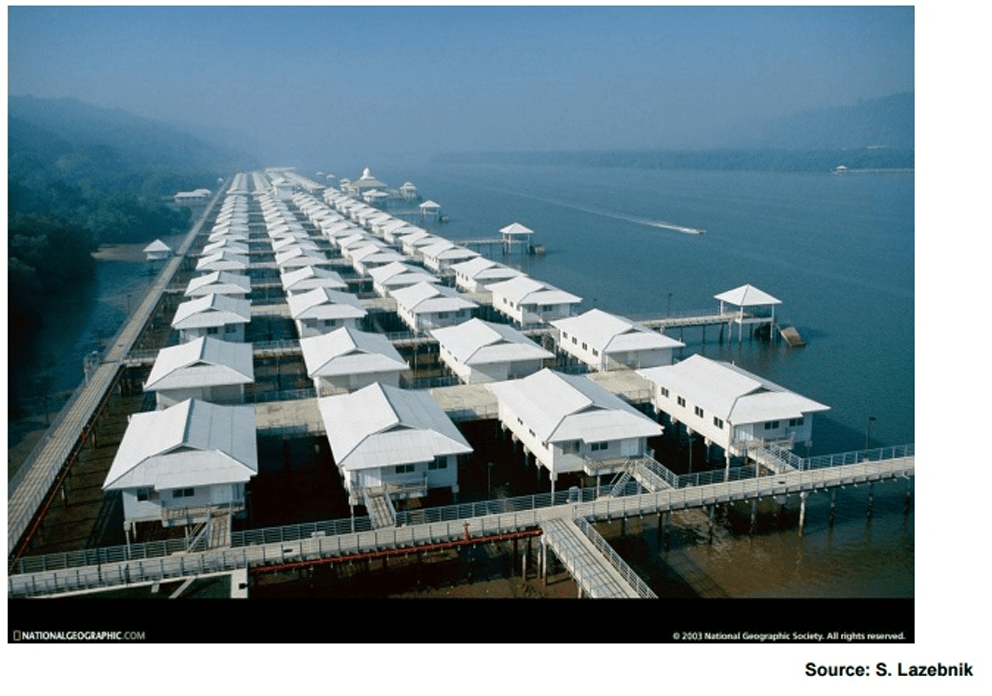

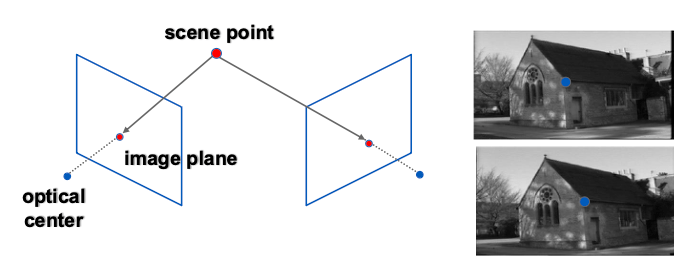

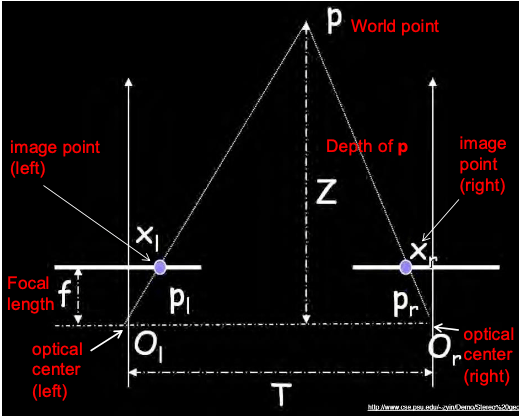

Two cameras, simultaneous views

Single camera moving in a static scene

Structure from Motion:

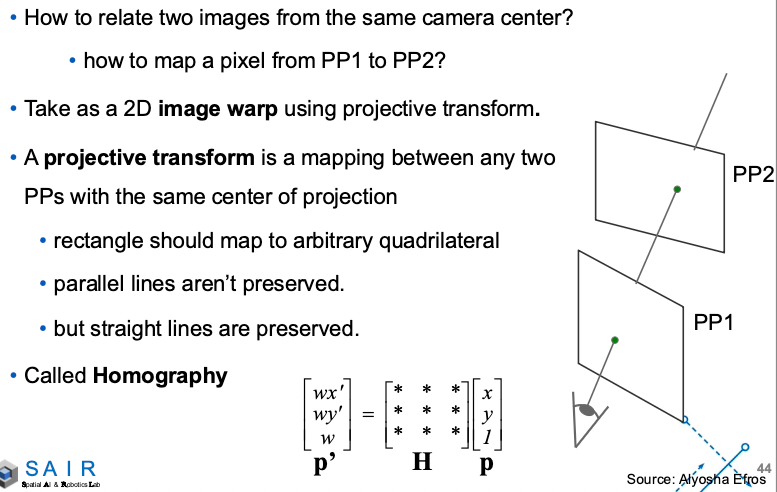

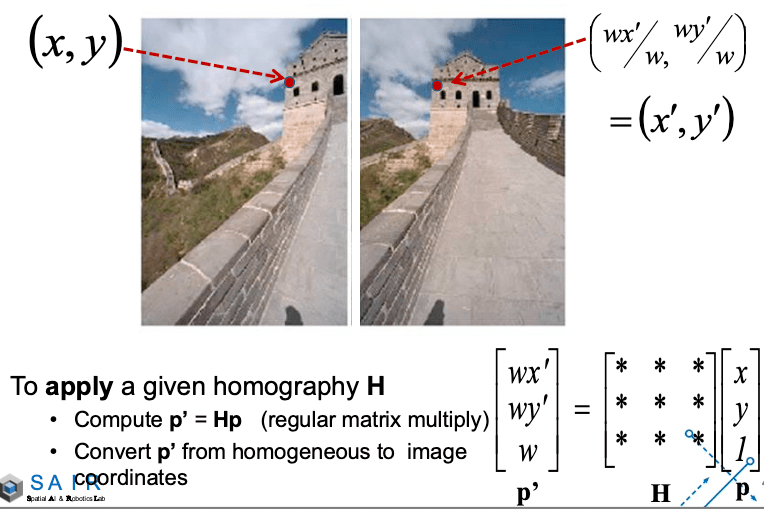

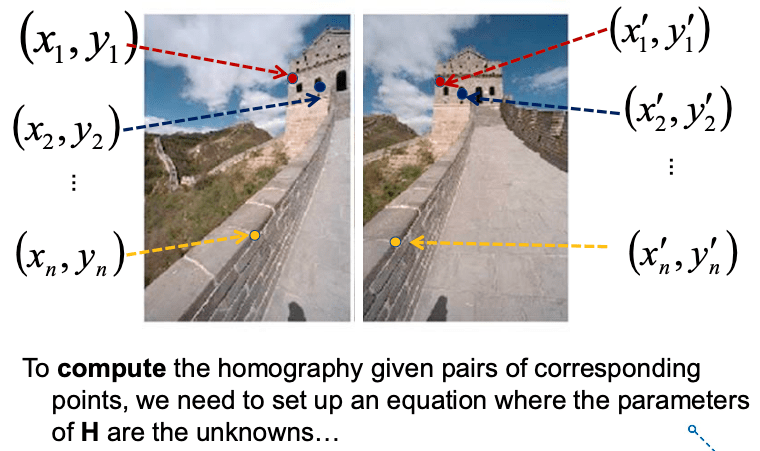

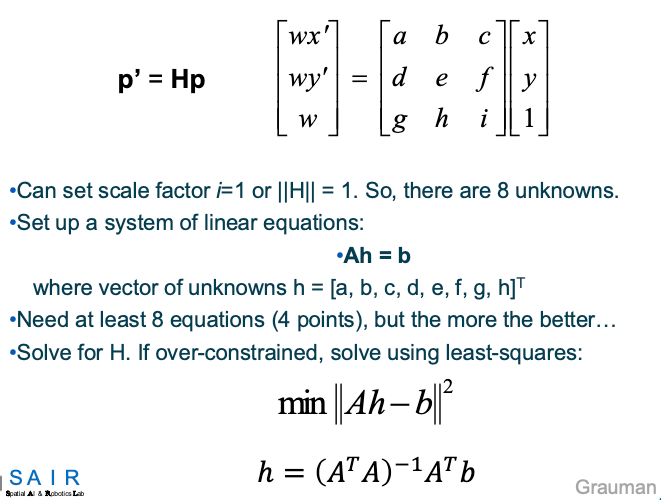

- Shape from "motion" between two images.

- Infer the 3D shape of a scene from two or more images taken from different viewpoints.

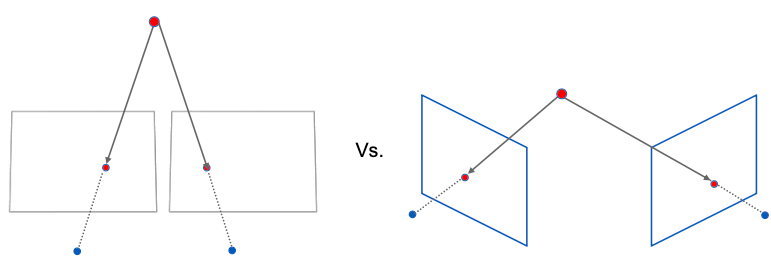

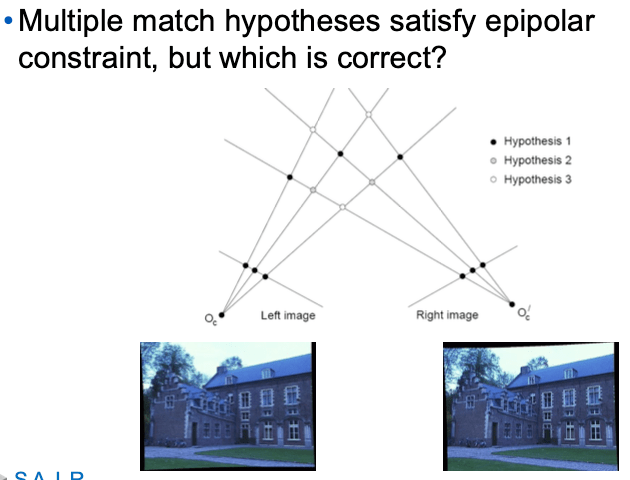

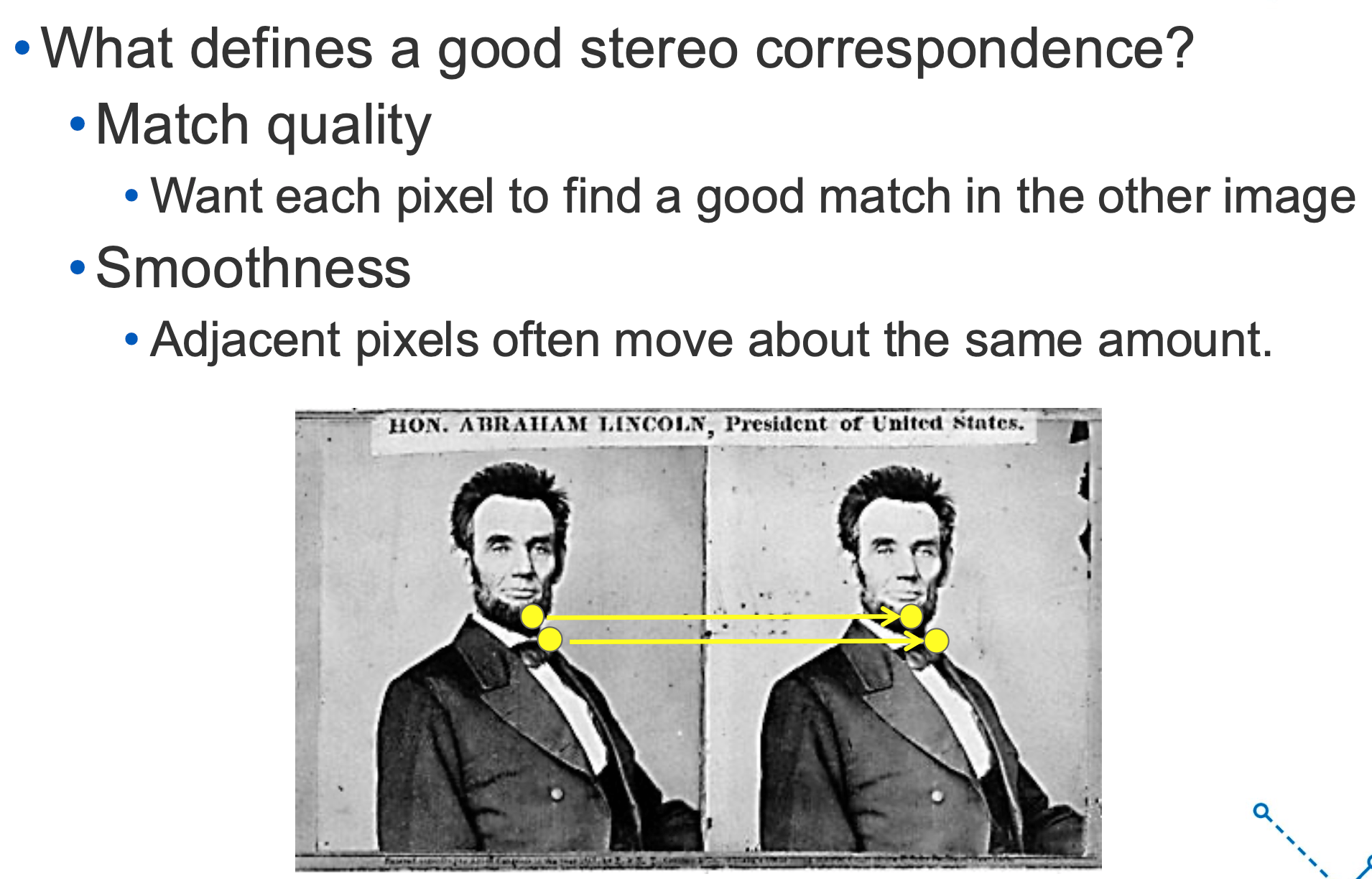

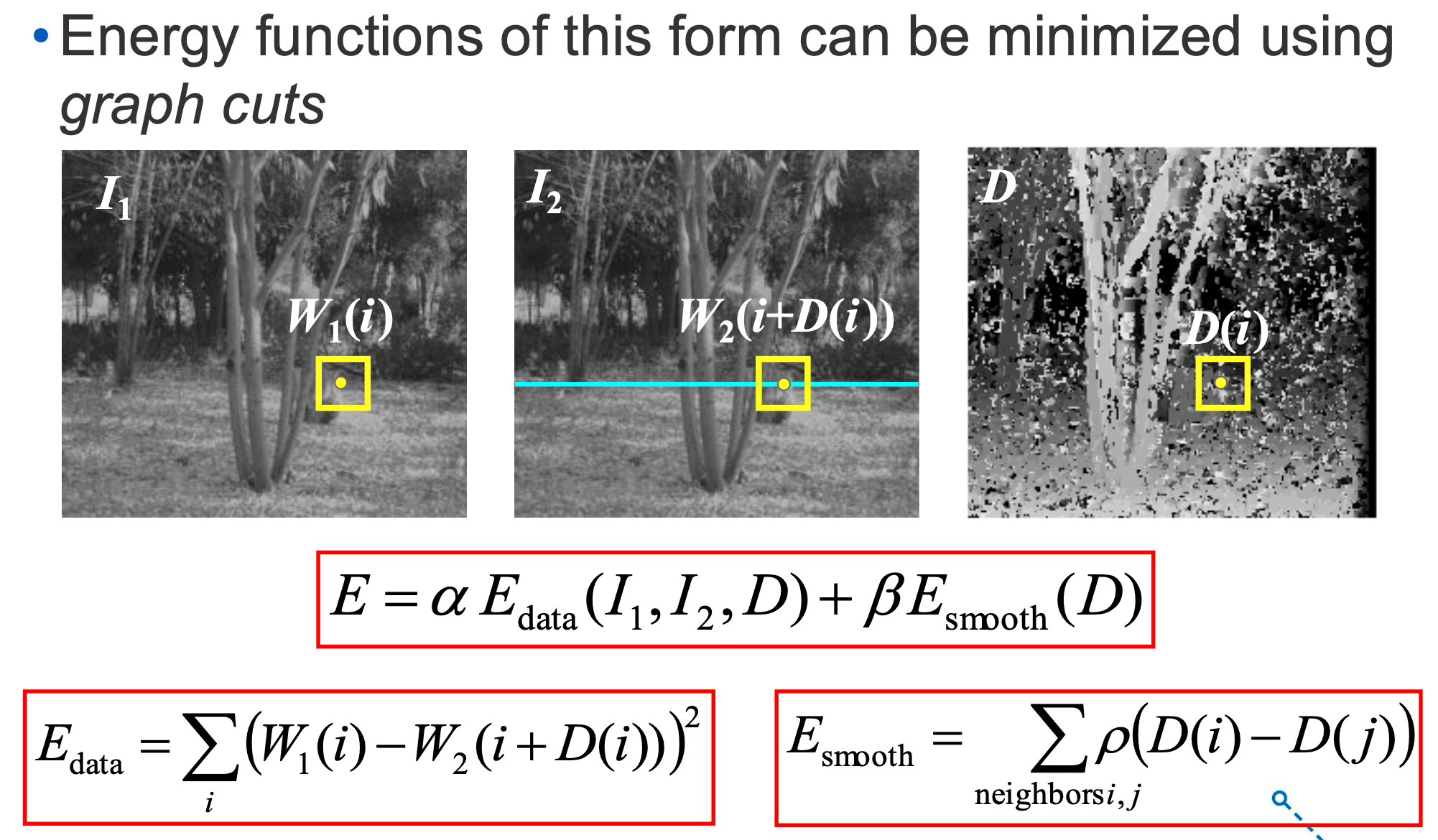

Stereo Correspondence:

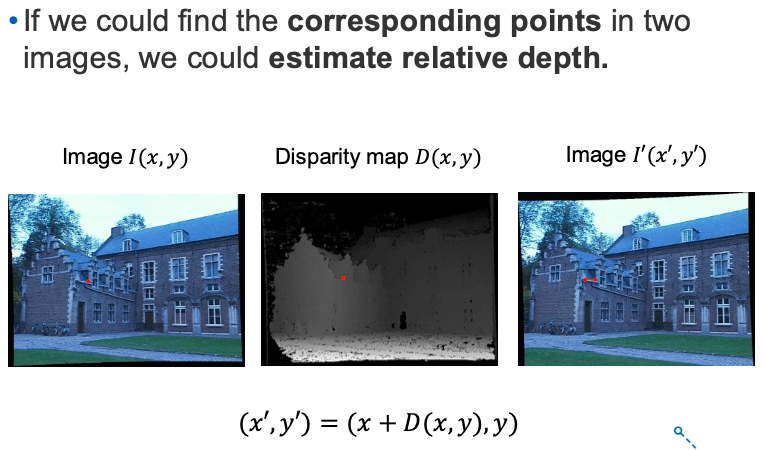

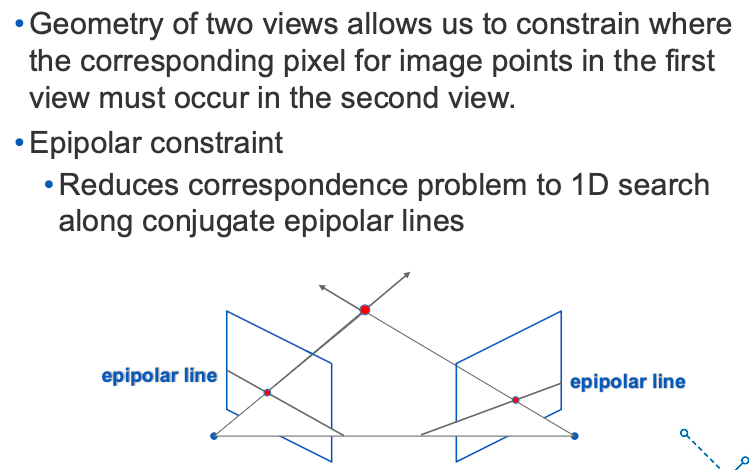

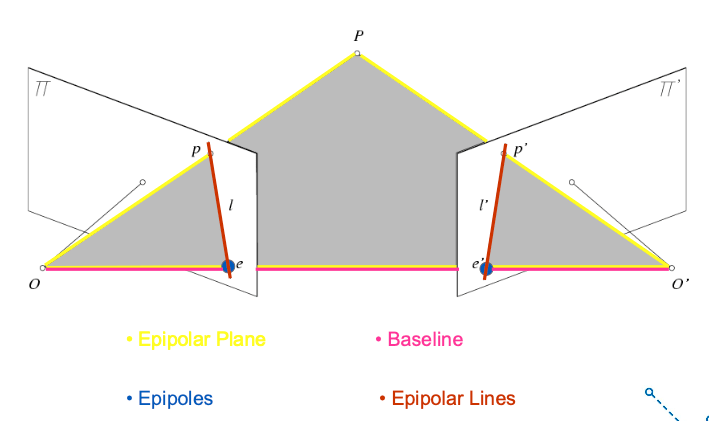

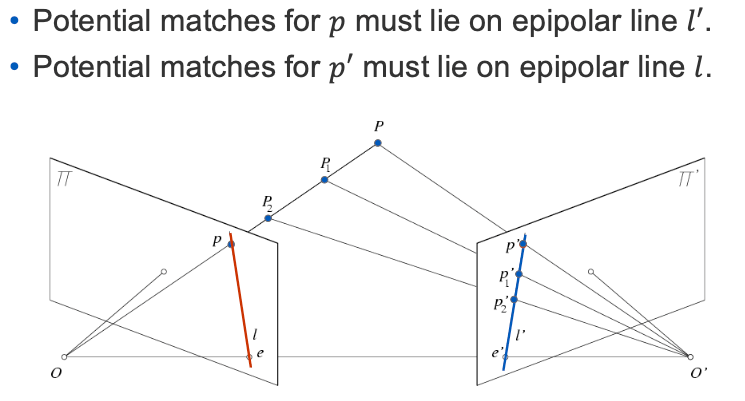

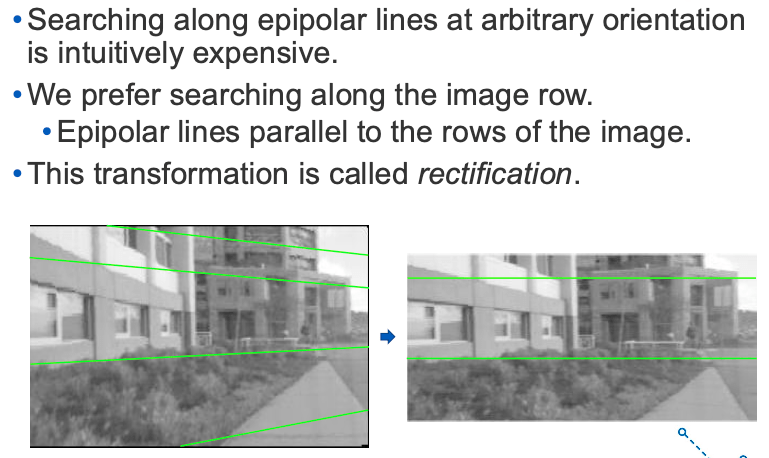

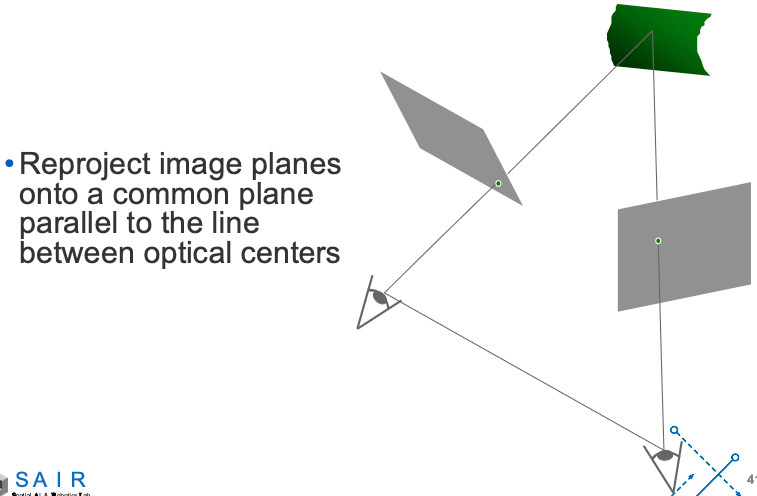

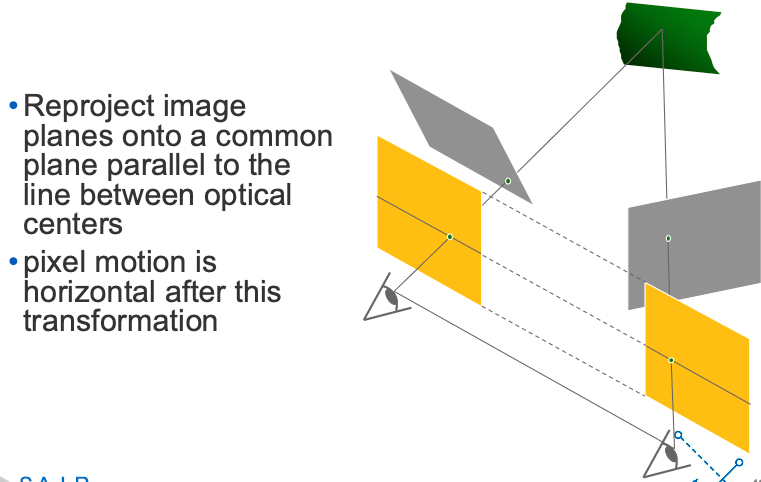

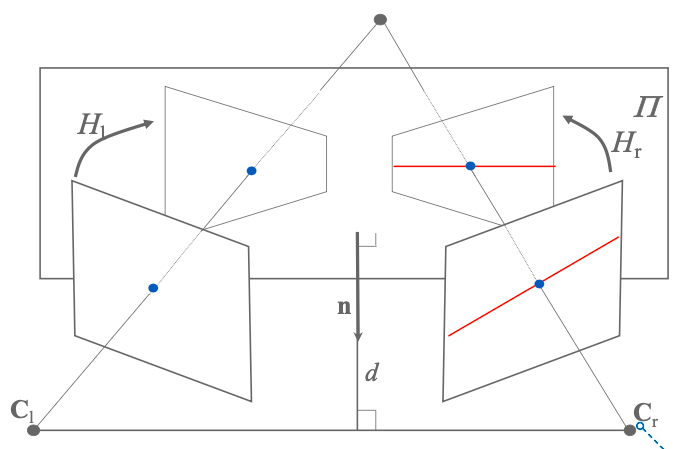

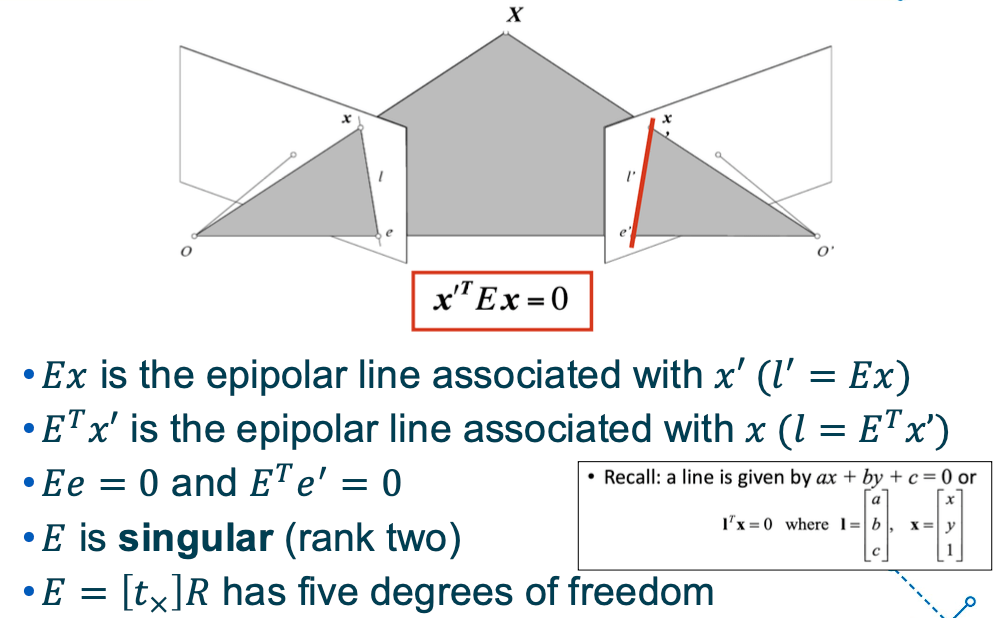

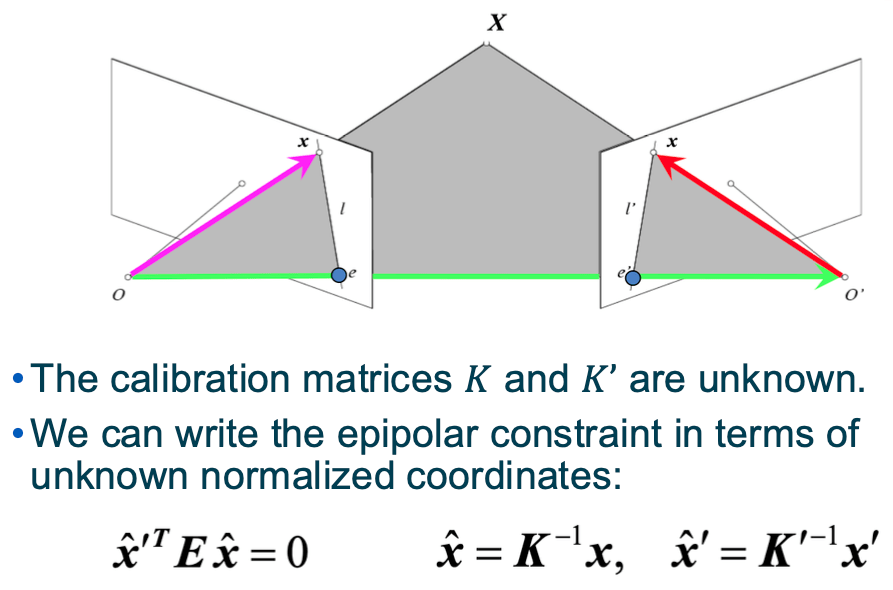

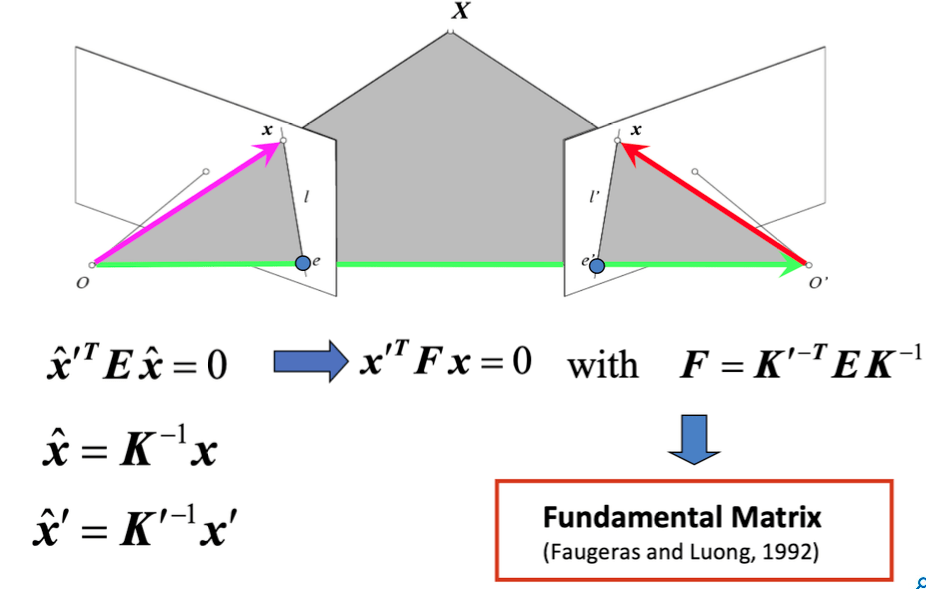

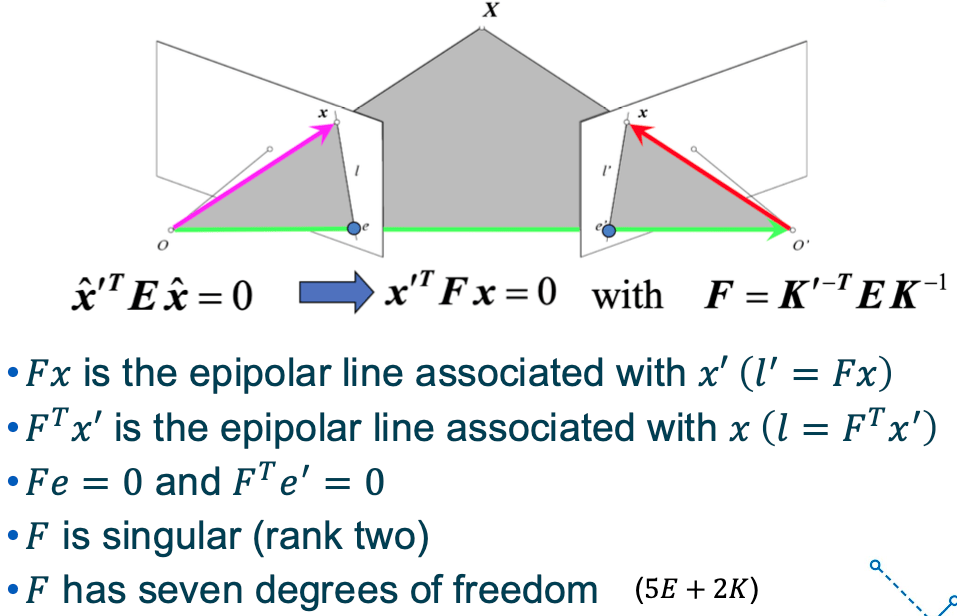

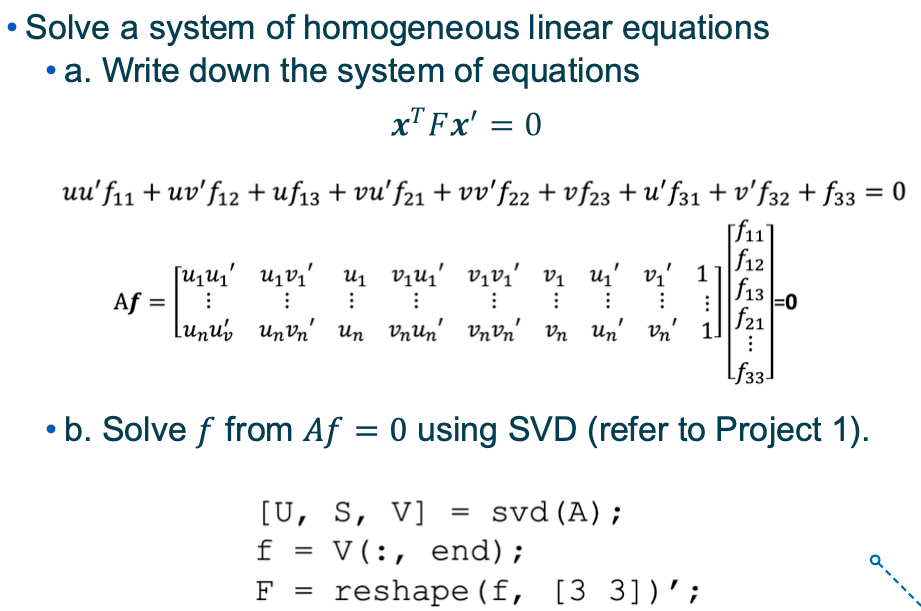

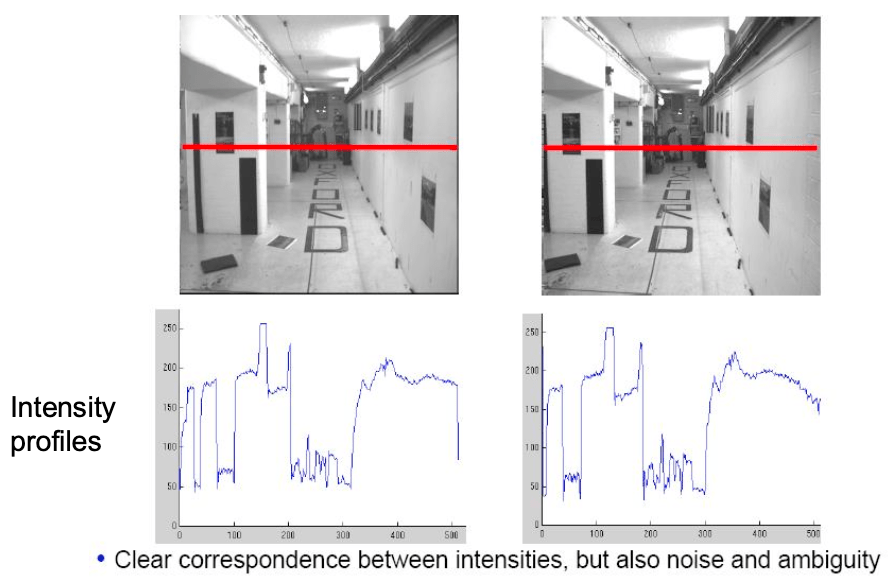

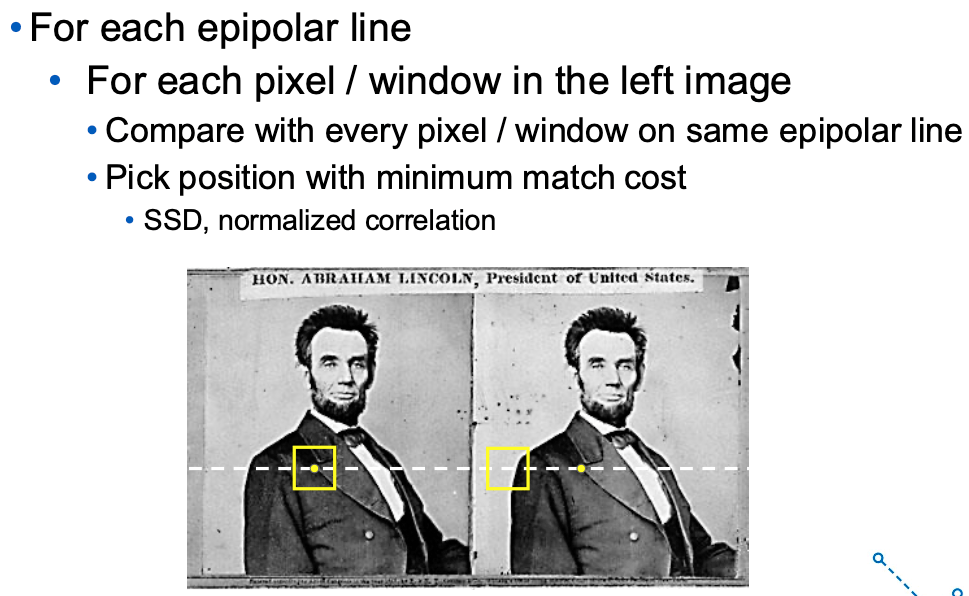

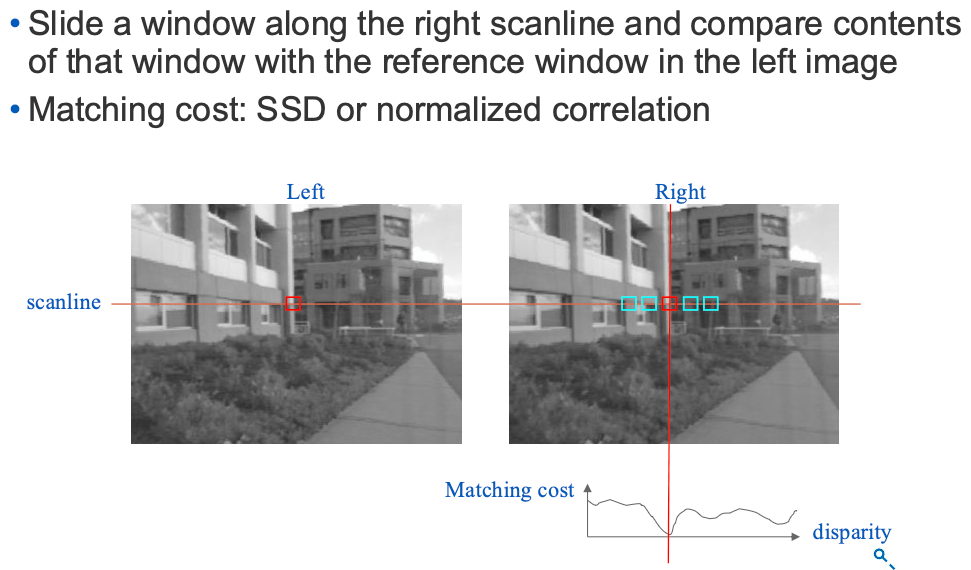

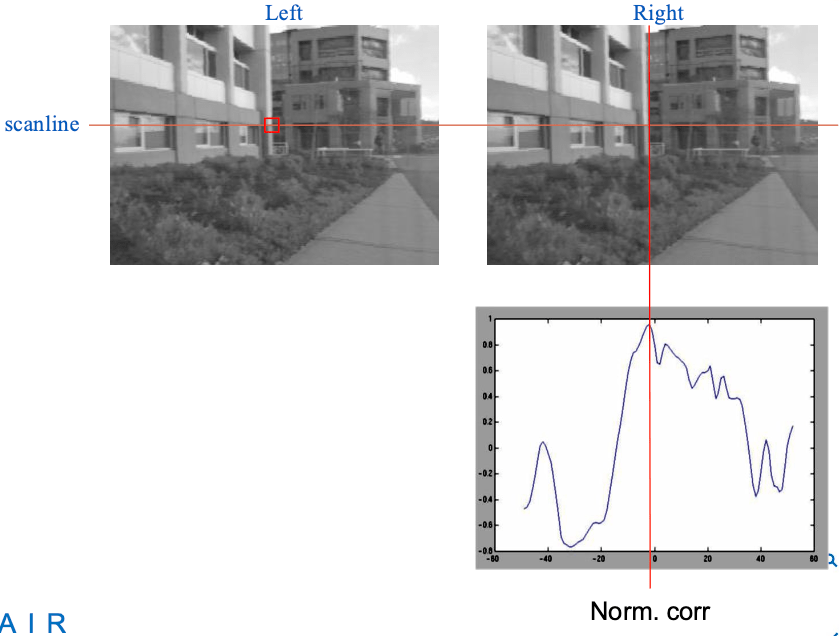

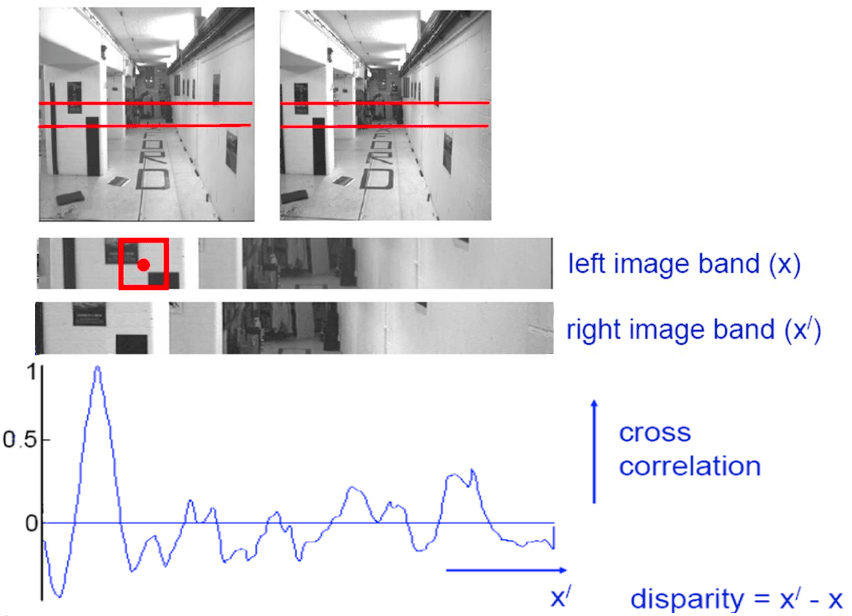

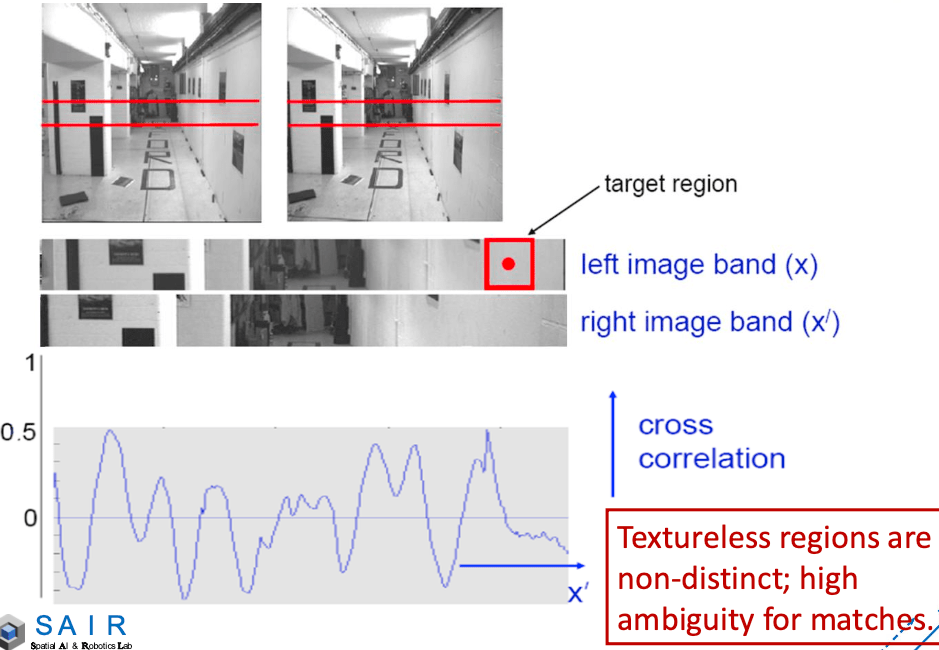

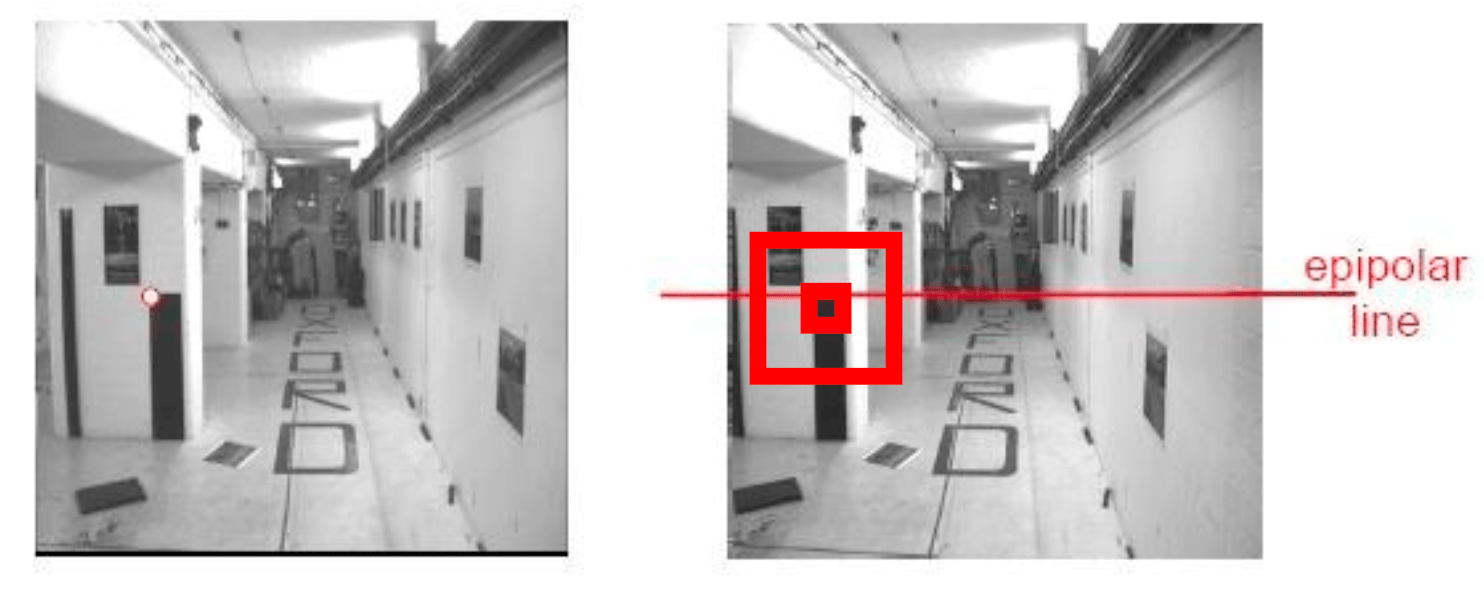

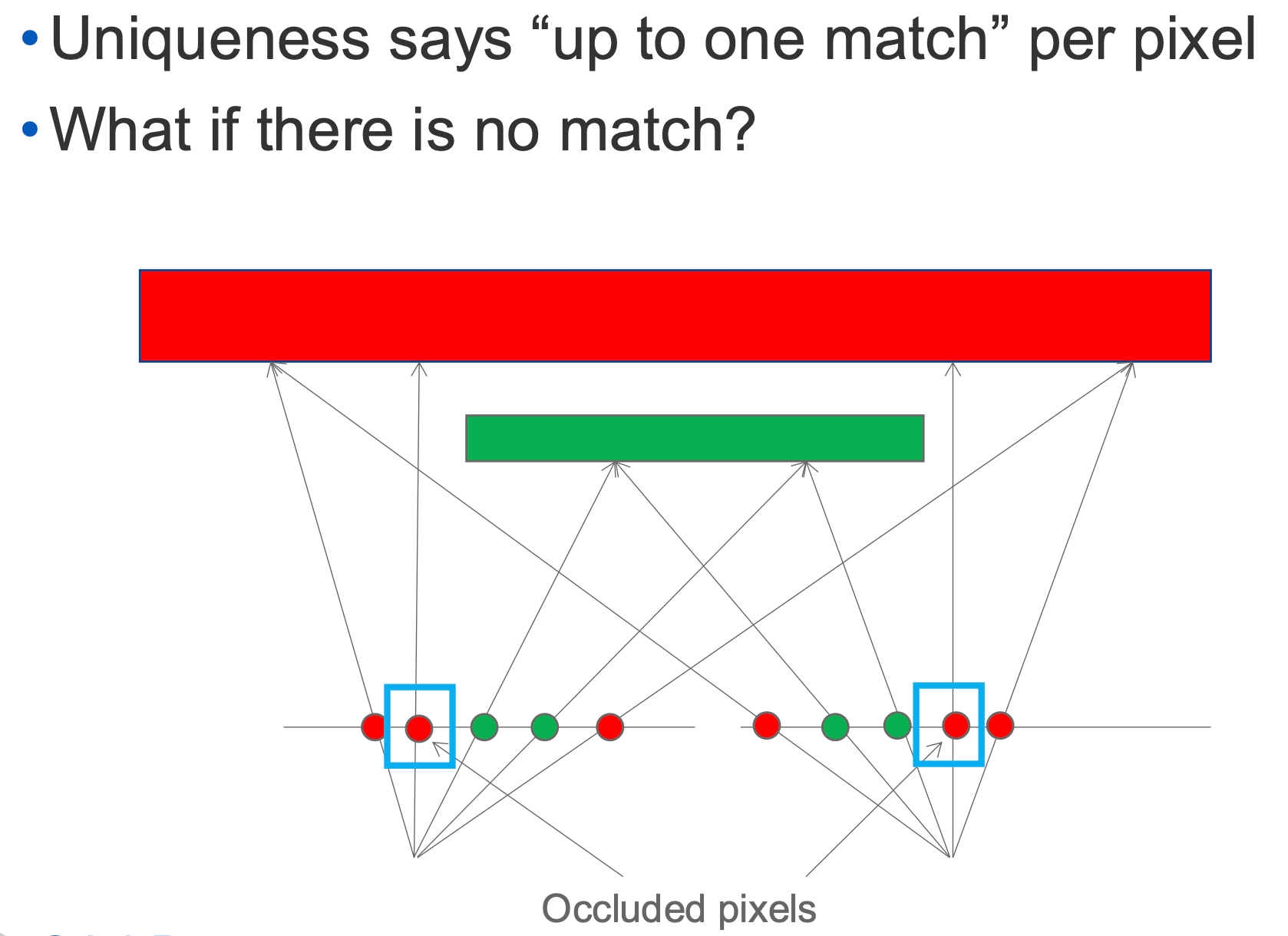

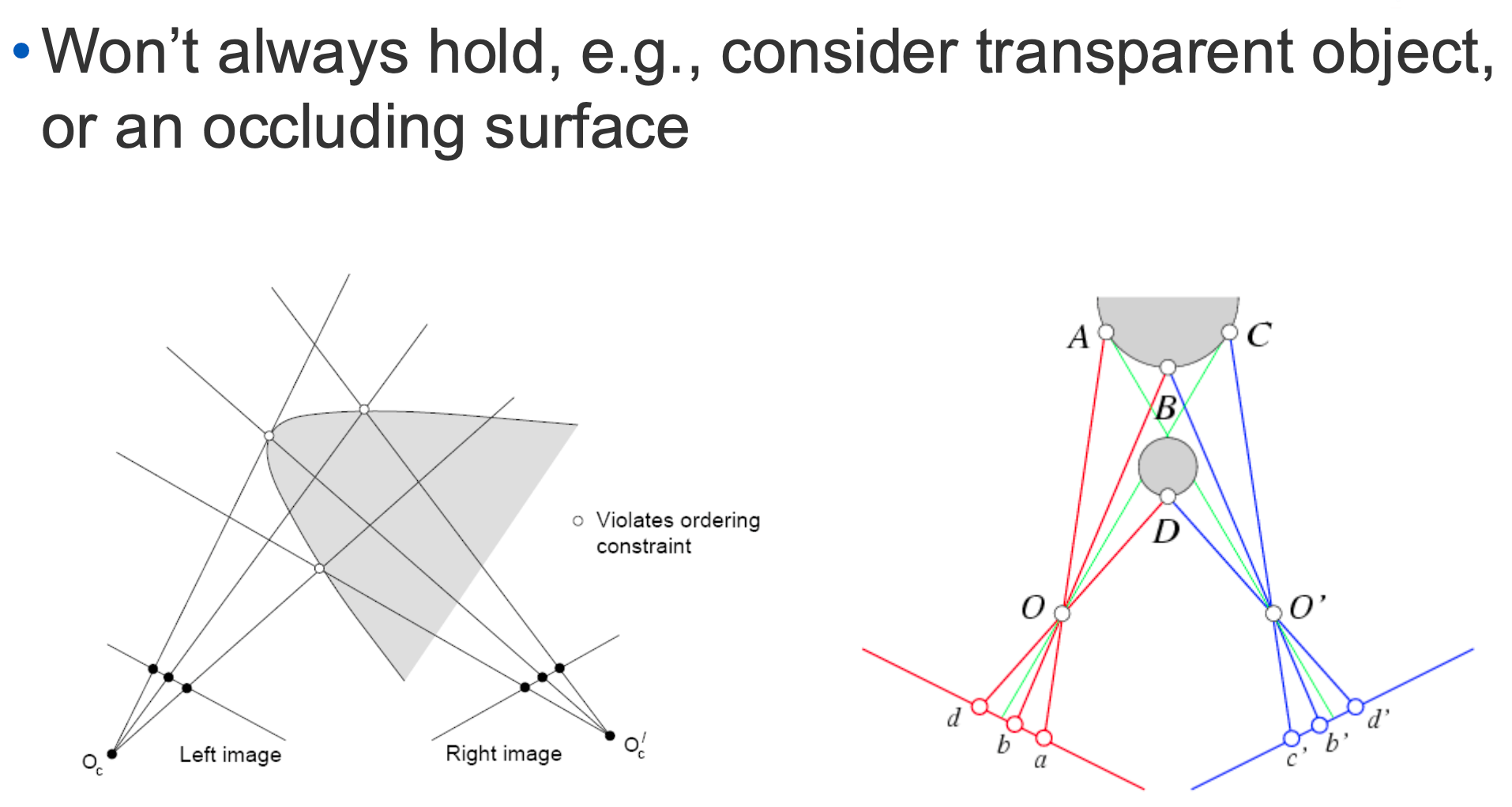

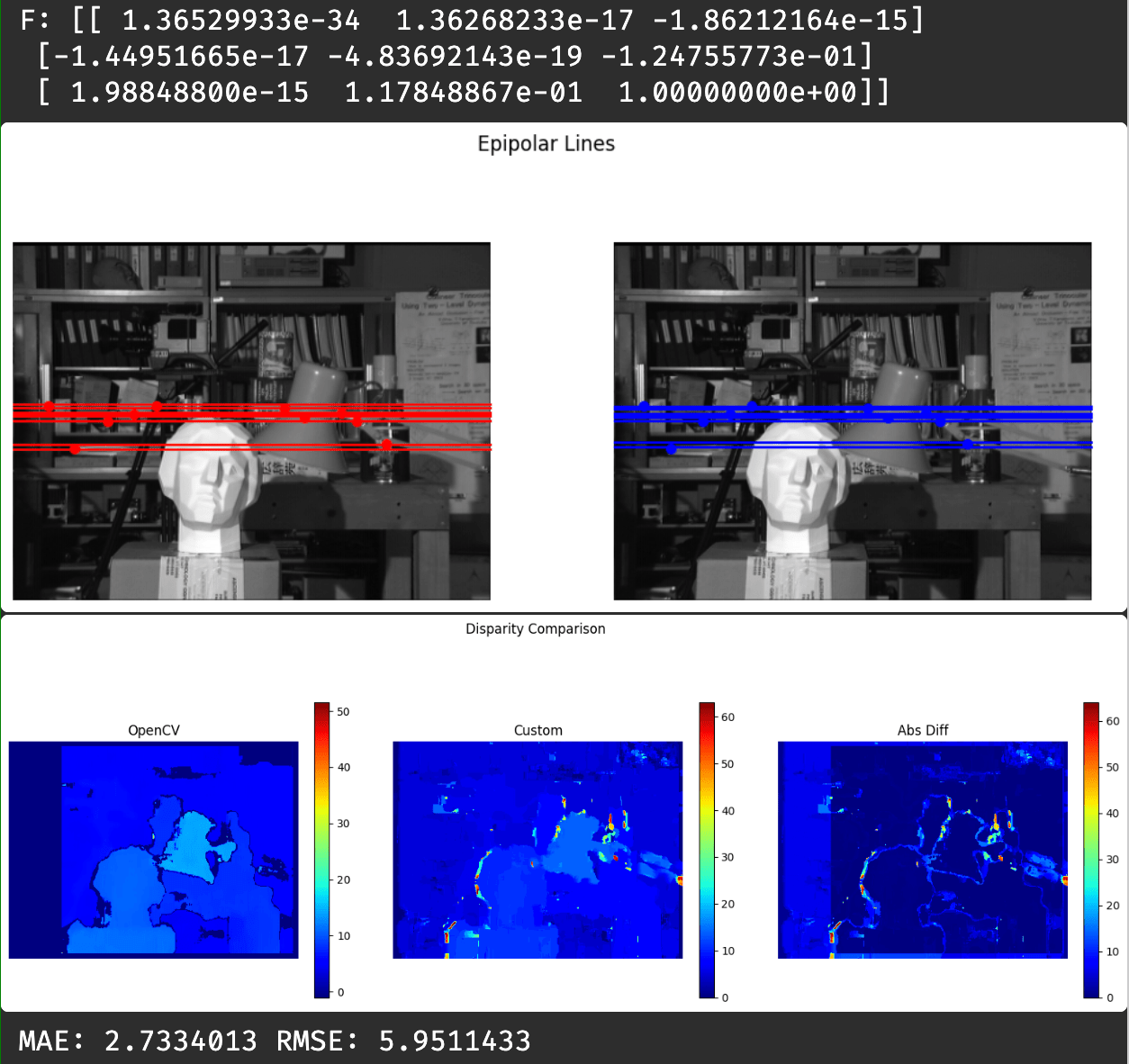

Given a point in one of the images, where could its corresponding points be in the other images?

Motion (Camera Calibration):

Given a set of corresponding points in two or more images, compute the camera parameters.

Structure (Depth in the Scene):

Given projections of the same 3D point in two or more images, compute the 3D coordinates of that point.
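The structure problem above can be sketched with linear (DLT) triangulation. This is a minimal illustration, not the course's implementation: it assumes both 3x4 projection matrices are already known, and the intrinsics `K`, the 0.1 baseline, and the helper names `triangulate_point` and `project` are hypothetical values chosen for the example.

```python
import numpy as np

def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two views.

    P1, P2: 3x4 camera projection matrices (assumed known).
    x1, x2: (u, v) pixel coordinates of the same 3D point in each image.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, X):
    """Project a 3D point X with projection matrix P and return pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

# Hypothetical setup: identical intrinsics, second camera shifted 0.1 along +x.
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.1], [0.0], [0.0]])])

X_true = np.array([0.2, -0.1, 2.0])
X_est = triangulate_point(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noise-free projections, `X_est` should match `X_true` up to floating-point error. With noisy correspondences the same system is solved in a least-squares sense, which is one reason the SVD is used here rather than a direct inverse.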

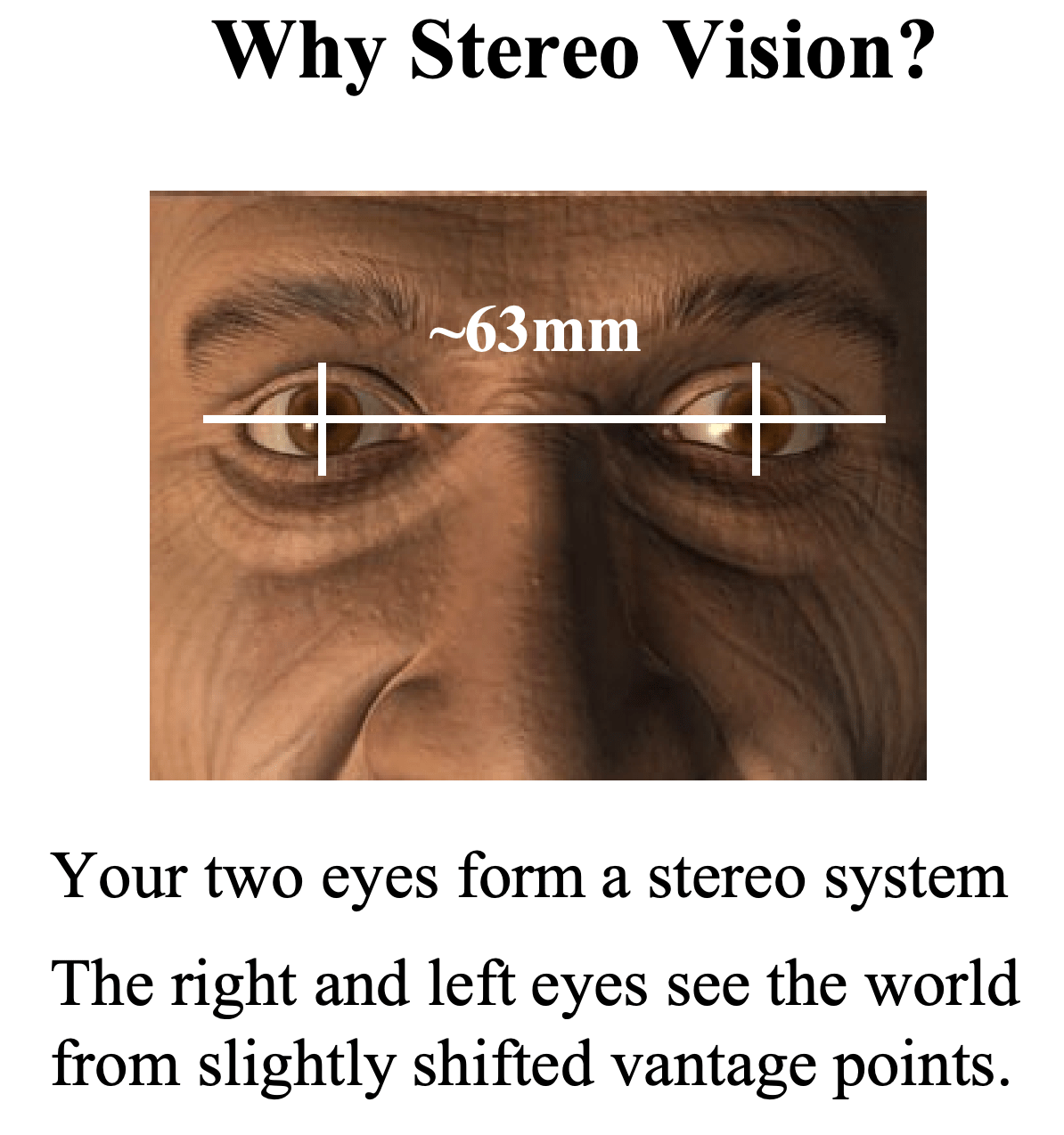

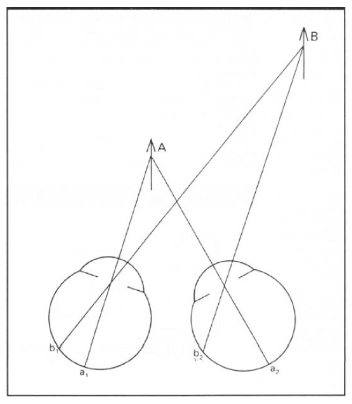

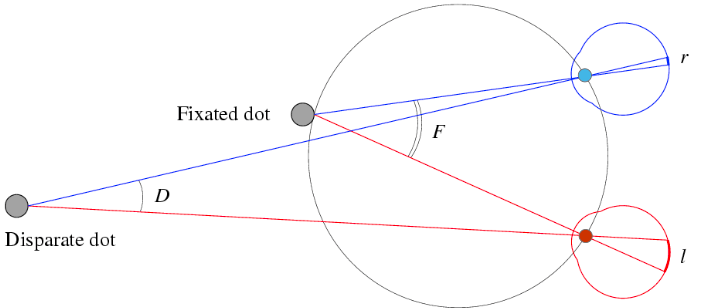

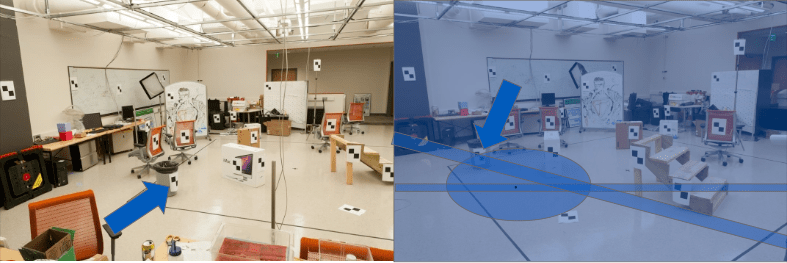

Human eyes fixate on a point in space: they rotate so that the corresponding images form at the center of each fovea.

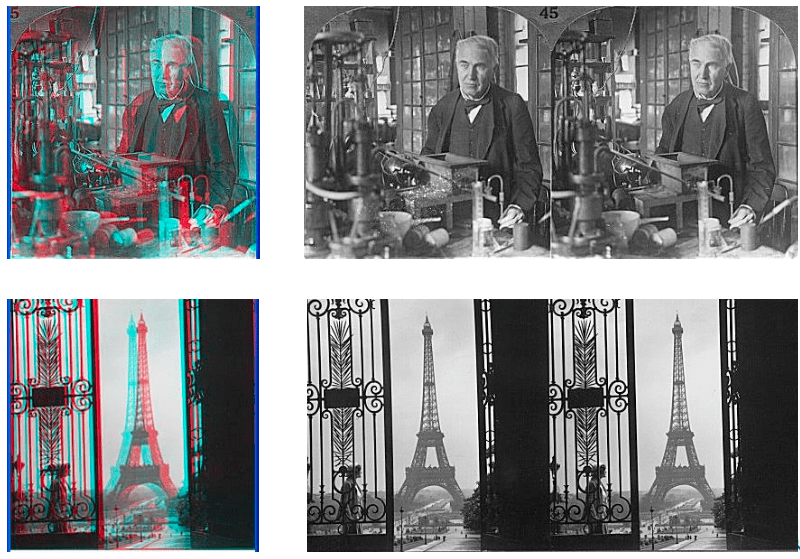

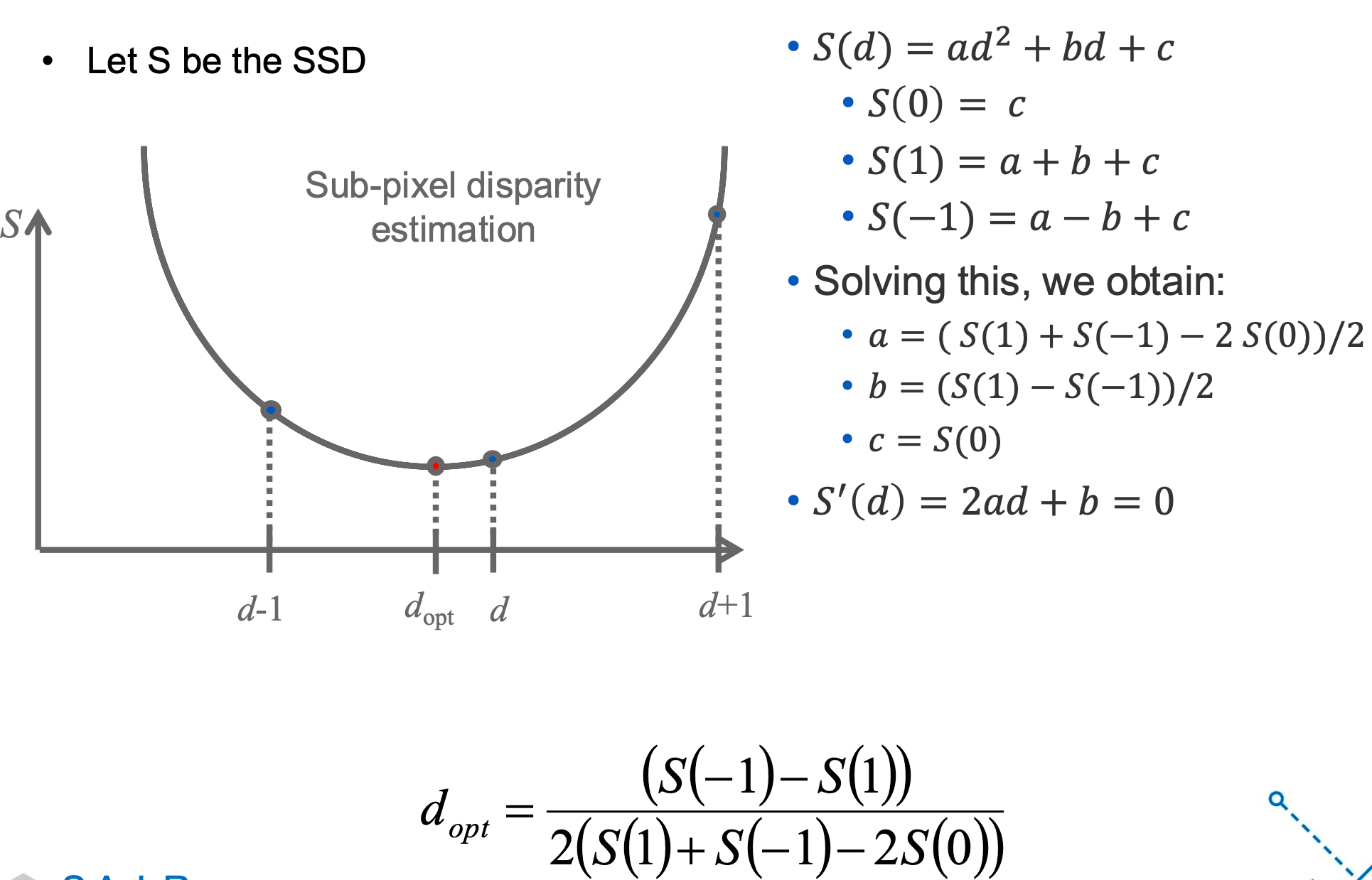

Disparity occurs when the eyes fixate on one object; other objects then appear at different visual angles in the two eyes.

We will be using Disparity for depth estimation.

We will assume for now that these parameters are given and fixed.

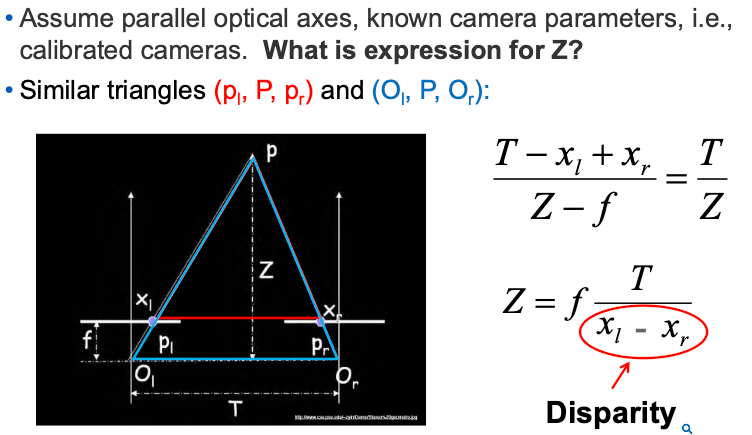

Assuming parallel optical axes, known camera parameters (i.e., calibrated cameras).
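Under the parallel-axis, calibrated setup described above, depth follows from disparity by similar triangles: `Z = f * B / d`, where `f` is the focal length in pixels, `B` is the baseline between the cameras, and `d = x_left - x_right` is the disparity in pixels. The sketch below illustrates that relation; the function name and the numeric values (`focal_px=700`, `baseline_m=0.1`) are assumptions made for the example, not values from the lecture.

```python
import numpy as np

def depth_from_disparity(disparity, focal_px, baseline_m):
    """Convert disparity (pixels) to depth (metres) for a rectified stereo pair.

    Assumes parallel optical axes and identical, known intrinsics.
    Pixels with non-positive disparity are marked as infinitely far away.
    """
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth

# Hypothetical values: larger disparity means a closer point.
depths = depth_from_disparity([70.0, 35.0, 7.0, 0.0], focal_px=700.0, baseline_m=0.1)
# The first three entries come out at roughly 1 m, 2 m, and 10 m; the last is inf.
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points (small `d`) than for nearby ones.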

General Case

\( 2T + 3R\)

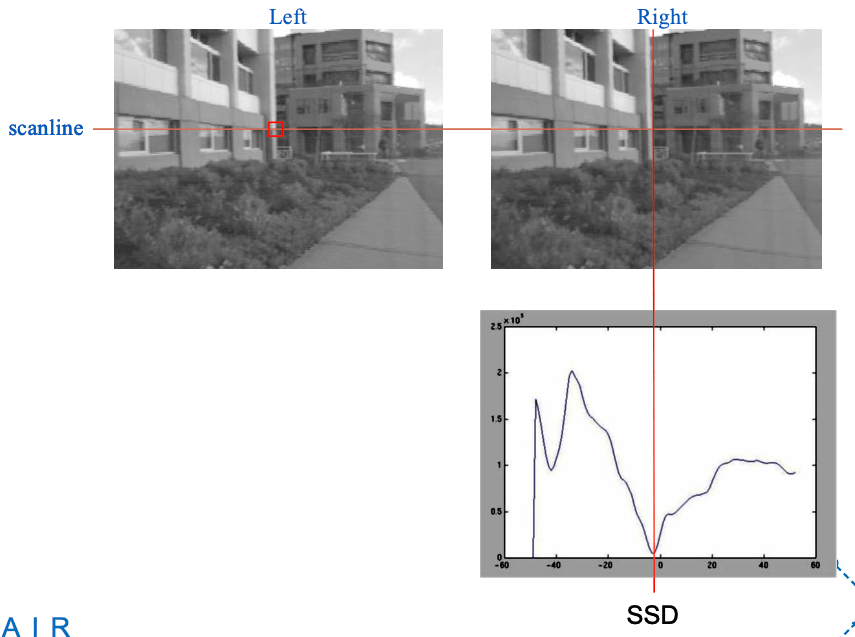

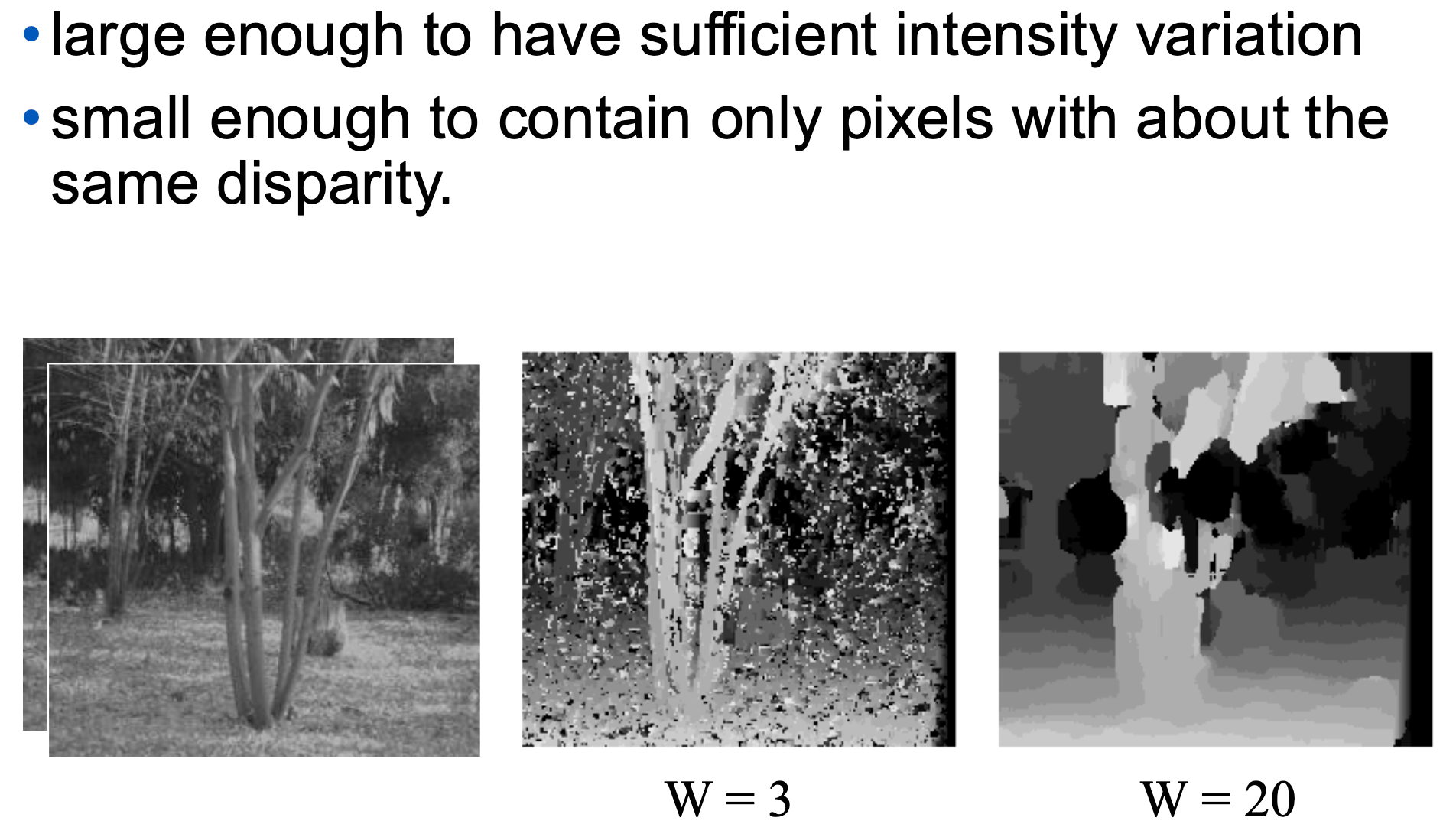

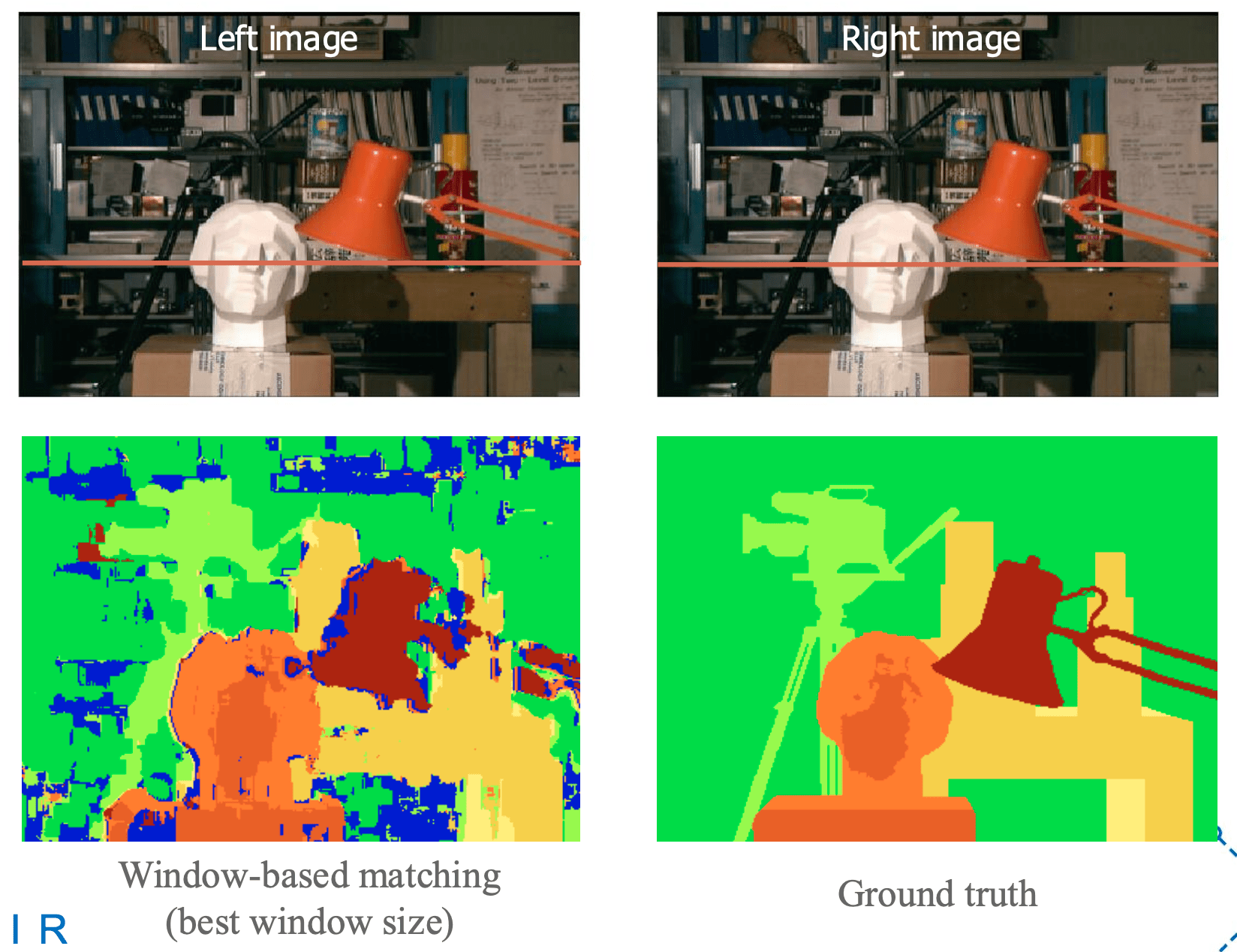

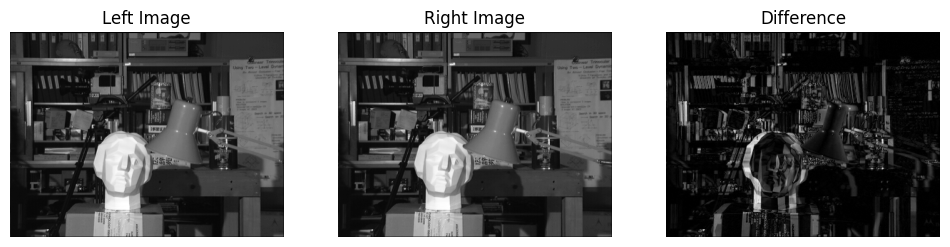

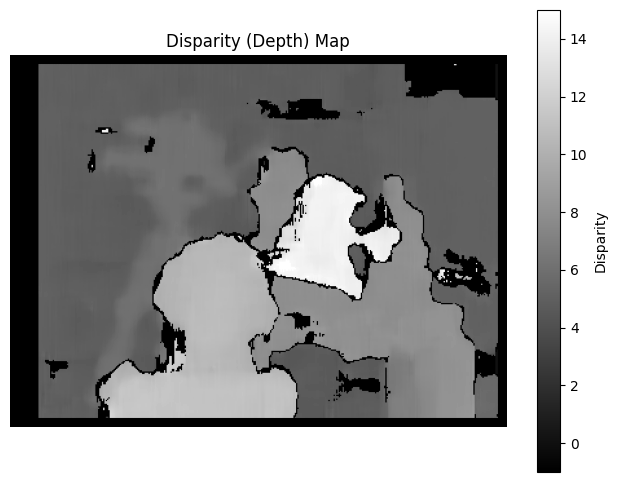

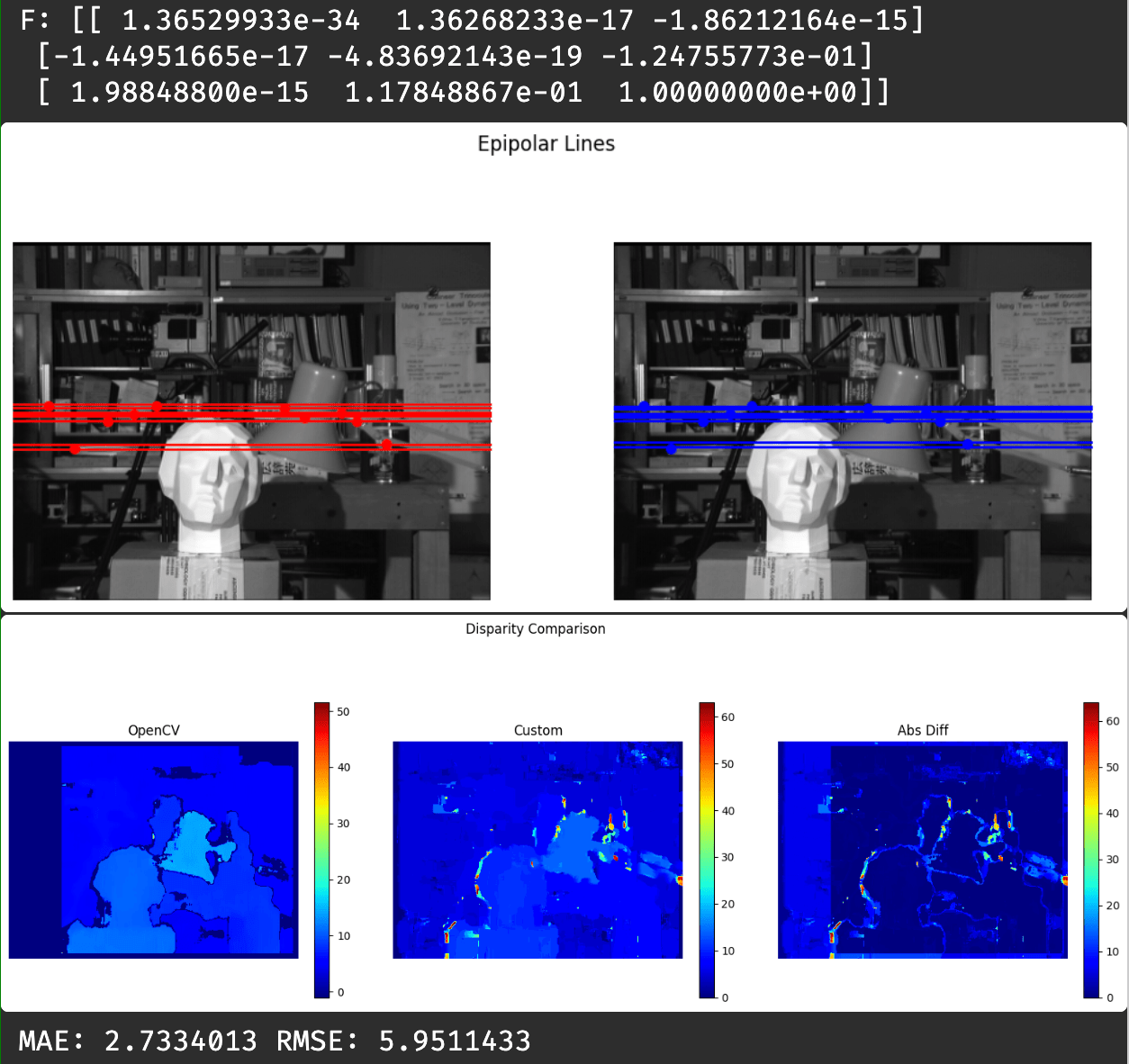

Use the Live Code on the course website to experiment with the implementation of depth estimation using window search.
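For readers without access to the Live Code, window search can be sketched as follows. This is not the course implementation: it is a minimal sum-of-squared-differences (SSD) block matcher that assumes a rectified grayscale pair, so each match lies on the same row. The function name, `max_disp`, `half_win`, and the synthetic test images are all hypothetical.

```python
import numpy as np

def block_matching_disparity(left, right, max_disp=16, half_win=2):
    """Brute-force window search along scanlines of a rectified stereo pair.

    For each left-image pixel, compare its window against right-image windows
    shifted by d = 0..max_disp pixels to the left and keep the d with lowest SSD.
    Border pixels without a full search range are left at 0.
    """
    left = left.astype(float)
    right = right.astype(float)
    h, w = left.shape
    disp = np.zeros((h, w), dtype=int)
    for y in range(half_win, h - half_win):
        for x in range(half_win + max_disp, w - half_win):
            patch = left[y - half_win:y + half_win + 1,
                         x - half_win:x + half_win + 1]
            best_cost, best_d = np.inf, 0
            for d in range(max_disp + 1):
                cand = right[y - half_win:y + half_win + 1,
                             x - d - half_win:x - d + half_win + 1]
                cost = np.sum((patch - cand) ** 2)  # SSD matching cost
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y, x] = best_d
    return disp

# Synthetic check: a random texture shifted by a constant, known disparity.
rng = np.random.default_rng(0)
right_img = rng.random((20, 48))
true_d = 5
left_img = np.roll(right_img, true_d, axis=1)  # left[y, x] == right[y, x - true_d]
disp_map = block_matching_disparity(left_img, right_img, max_disp=8, half_win=2)
```

On this synthetic pair the interior of `disp_map` should recover the constant shift. On real images, textureless regions and occlusions make the minimum-cost match ambiguous, and the choice of window size trades detail against noise.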

Depth map \( \rightarrow \)


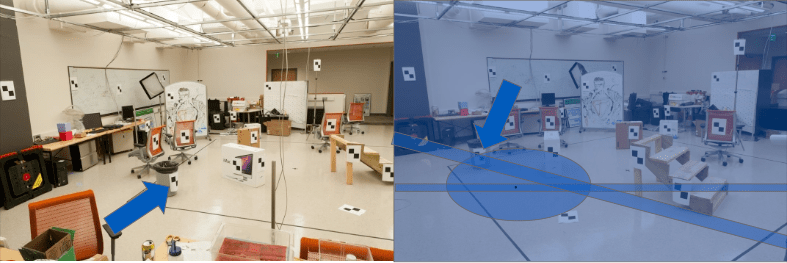

- [ICCV 2025] StereoGen: Towards Open-World Generation of Stereo Images and Unsupervised Matching.

- Depth Anything V2: https://depth-anything-v2.github.io/

- Video Depth Anything: https://huggingface.co/spaces/depth-anything/Video-Depth-Anything

Lecture 6, 7, 8: Stereo Vision and Depth Estimation

By Naresh Kumar Devulapally
